Awesome

Heroku CLIP

Deploy OpenAI's CLIP on Heroku.

Usage

heroku login

heroku create

git push heroku main

If you have already deployed an app, then do not create it again after you have cloned the repo. Follow this instruction to add your remote instead. Typically, for myself:

heroku git:remote -a dry-taiga-80279

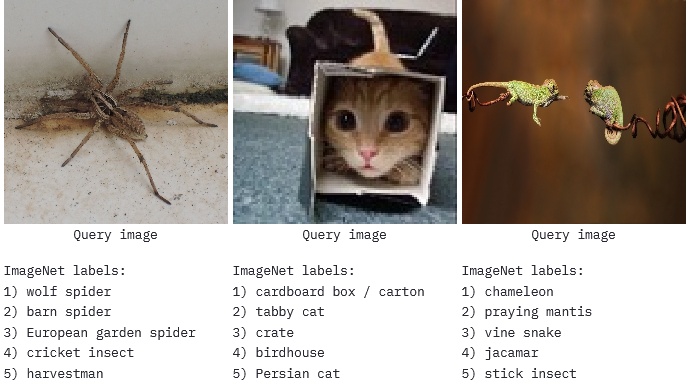

Results

Try the app on Heroku:

- upload an image,

- it will be resized to 224x224,

- a prediction of the top-5 ImageNet labels (out of 1000) will be displayed.

If you want to try another image, make sure to first click on the red "X" to close the previous one:

Recommendations

First, to avoid using too much memory on Heroku:

- ensure you don't store many variables and models in the global scope, use functions!

- resize the input image, e.g. to 224x224 which is the resolution expected by CLIP anyway,

Otherwise, you will notice in logs that the app uses too much memory, or worse, crashes because of it:

Process running mem=834M(162.9%) Error R14 (Memory quota exceeded)

Second, the app is slow because it does not have access to a GPU on Heroku.

If a REST API is not required, I would recommend to use Colab instead:

- the notebook can be user-friendly,

- memory is less constrained,

- a GPU will be available for free.

References

- Open AI's CLIP (Contrastive Language-Image Pre-Training):

- My usage of CLIP:

steam-CLIP: retrieve games with similar banners, using OpenAI's CLIP (resolution 224),heroku-flask-api: serve the matching results through an API built with Flask on Heroku,heroku-clip: deploy CLIP on Heroku.

- Examples of interactive applications:

- using Streamlit:

ternaus/retinaface_demo- caveat: there is no GPU because of deployment to Heroku,

- to use a GPU, you will need to install PyTorch, then run the app locally,

- using Flask:

matsui528/sis- caveat: a priori no GPU for the same reason as with Streamlit,

- to use a GPU, you will need to install Tensorflow 2, then run the app locally,

- using Node.js:

rom1504/image_embeddings- caveat:

- embedding has to be pre-computed and downloaded by the user,

- the user won't be able to compute the embedding for any image query on the fly,

- trivia:

- 4.88 MB .npy for 1000 vectors of dimension 1280,

- potentially up to 60 MB .npy for 30k vectors of dimension 512.

- caveat:

- using Colab:

tg-bomze/Face-Depixelizer- caveat: the user has to log in with a Google account,

- there is access to a free GPU.

- using Streamlit: