
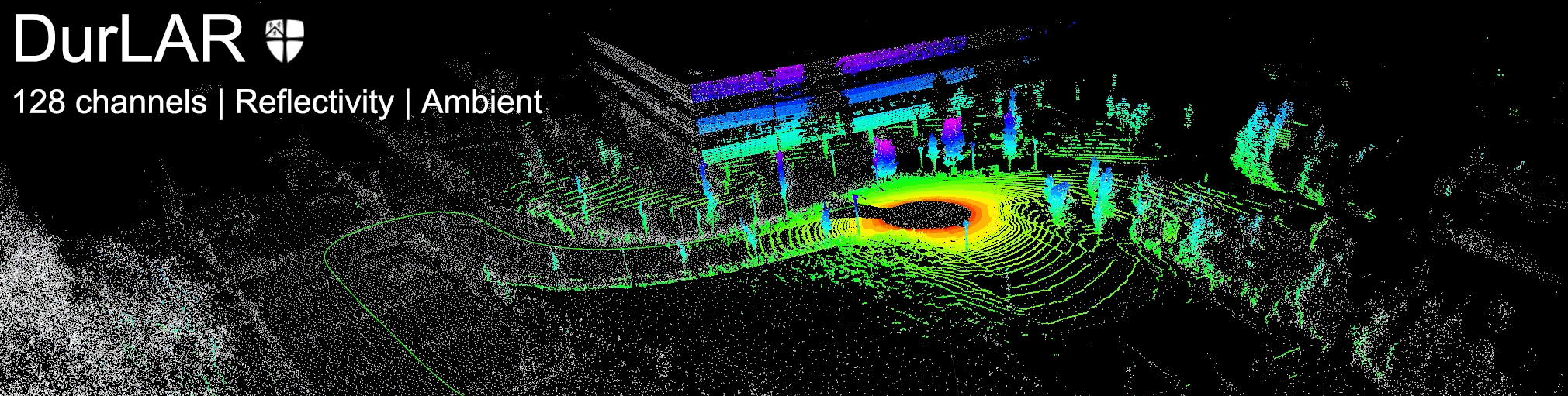

DurLAR: A High-Fidelity 128-Channel LiDAR Dataset

https://github.com/l1997i/DurLAR/assets/35445094/2c6d4056-a6de-4fad-9576-693efe2860f0

News

- [2024/12/05] We provide the intrinsic parameters of our OS1-128 LiDAR [download].

- [2024/09/07] We provide the OneDrive link [download] for downloading the DurLAR dataset. To access the OneDrive link, please email your affiliation, name, and intended usage to i@luisli.org.

Sensor placement

- LiDAR: Ouster OS1-128 LiDAR sensor with 128-channel vertical resolution

- Stereo Camera: Carnegie Robotics MultiSense S21 stereo camera with grayscale, colour, and IR-enhanced imagers, 2048x1088 (2 MP) resolution

- GNSS/INS: OxTS RT3000v3 global navigation satellite and inertial navigation system, supporting localization from the GPS, GLONASS, BeiDou and Galileo constellations, with PPP and SBAS corrections

- Lux Meter: Yocto Light V3, a USB ambient light sensor (lux meter), measuring ambient light up to 100,000 lux

Panoramic Imagery

<br> <p align="center"> <img src="https://github.com/l1997i/DurLAR/blob/main/reflect_center.gif?raw=true" width="100%"/> <h5 id="title" align="center">Reflectivity imagery</h5> </br>
<br> <p align="center"> <img src="https://github.com/l1997i/DurLAR/blob/main/ambient_center.gif?raw=true" width="100%"/> <h5 id="title" align="center">Ambient imagery</h5> </br>

File Description

Each drive of the DurLAR dataset provides the following 8 topics:

- ambient/: panoramic ambient imagery

- reflec/: panoramic reflectivity imagery

- image_01/: right camera (grayscale, synced and rectified)

- image_02/: left RGB camera (synced and rectified)

- ouster_points/: Ouster LiDAR point clouds (KITTI-compatible binary format)

- gps/, imu/, lux/: CSV files
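Since the point clouds are described as KITTI-compatible, one frame can probably be loaded as below. This is a minimal sketch that assumes the KITTI Velodyne layout (little-endian float32, four values per point: x, y, z, reflectance/intensity, in metres); the function names `load_ouster_bin` and `point_ranges` are hypothetical helpers, not part of any DurLAR toolkit.

```python
import numpy as np


def load_ouster_bin(path):
    """Load one ouster_points frame as an (N, 4) float32 array.

    Assumes the KITTI Velodyne layout: a flat run of little-endian float32
    values, four per point (x, y, z, reflectance/intensity).
    """
    raw = np.fromfile(path, dtype="<f4")
    if raw.size % 4 != 0:
        raise ValueError(f"{path}: {raw.size} floats is not a multiple of 4")
    return raw.reshape(-1, 4)


def point_ranges(points):
    """Euclidean distance of each point from the sensor origin (metres, assuming KITTI units)."""
    return np.linalg.norm(points[:, :3], axis=1)
```

For example, `load_ouster_bin("DurLAR_<date>/ouster_points/data/<frame_number.bin>")` returns one row per point; if the released files turn out to carry more than four fields per point, the reshape width must be changed accordingly.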

The structure of the provided DurLAR full dataset zip file:

DurLAR_<date>/
├── ambient/
│ ├── data/
│ │ └── <frame_number.png> [ ..... ]
│ └── timestamp.txt
├── gps/
│ └── data.csv
├── image_01/
│ ├── data/
│ │ └── <frame_number.png> [ ..... ]
│ └── timestamp.txt
├── image_02/
│ ├── data/
│ │ └── <frame_number.png> [ ..... ]
│ └── timestamp.txt
├── imu/
│ └── data.csv
├── lux/
│ └── data.csv
├── ouster_points/
│ ├── data/
│ │ └── <frame_number.bin> [ ..... ]
│ └── timestamp.txt
├── reflec/
│ ├── data/
│ │ └── <frame_number.png> [ ..... ]
│ └── timestamp.txt
└── readme.md [ this README file ]
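Each per-frame topic pairs a data/ folder with a timestamp.txt. The sketch below matches frames to timestamps under a hypothetical assumption: timestamp.txt holds one timestamp per line, in the same order as the frame files sorted by (zero-padded) name. Timestamps are kept as raw strings because their exact format is not documented here; `load_topic` is an illustrative helper name.

```python
from pathlib import Path


def load_topic(drive_dir, topic, ext):
    """Pair each frame file of a topic with its line in timestamp.txt.

    Hypothetical assumption: timestamp.txt has one timestamp per line, in the
    same order as the frame files sorted by name.
    """
    topic_dir = Path(drive_dir) / topic
    frames = sorted((topic_dir / "data").glob(f"*{ext}"))
    stamps = [
        line.strip()
        for line in (topic_dir / "timestamp.txt").read_text().splitlines()
        if line.strip()  # ignore blank lines, e.g. a trailing newline
    ]
    if len(frames) != len(stamps):
        raise ValueError(f"{topic}: {len(frames)} frames but {len(stamps)} timestamps")
    return list(zip(frames, stamps))
```

Usage might look like `load_topic("DurLAR_20210716", "ouster_points", ".bin")`; a count mismatch is also a quick hint that a download was incomplete.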

The structure of the provided calibration zip file:

DurLAR_calibs/
├── calib_cam_to_cam.txt [ Camera to camera calibration results ]
├── calib_imu_to_lidar.txt [ IMU to LiDAR calibration results ]
└── calib_lidar_to_cam.txt [ LiDAR to camera calibration results ]
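The calibration file names mirror the KITTI raw-data convention, so the files may follow KITTI's `KEY: v1 v2 ...` line format. The sketch below assumes exactly that; the key names `R` (row-major 3x3 rotation) and `T` (translation) are KITTI's and are a hypothetical guess for DurLAR, so check them against the actual files before use. `read_calib` and `rigid_transform` are illustrative names.

```python
import numpy as np


def read_calib(path):
    """Parse a KITTI-style calibration file into {key: float array}.

    Assumes lines of the form 'KEY: v1 v2 ...'; entries whose values are not
    all numeric (e.g. a 'calib_time' date) are skipped.
    """
    calib = {}
    with open(path) as f:
        for line in f:
            key, sep, values = line.partition(":")
            if not sep:
                continue  # no 'KEY:' prefix on this line
            try:
                calib[key.strip()] = np.array([float(v) for v in values.split()])
            except ValueError:
                continue  # non-numeric entry
    return calib


def rigid_transform(calib, r_key="R", t_key="T"):
    """Build a 4x4 homogeneous transform from a row-major 3x3 rotation and a translation."""
    transform = np.eye(4)
    transform[:3, :3] = calib[r_key].reshape(3, 3)
    transform[:3, 3] = calib[t_key].reshape(3)
    return transform
```

Under these assumptions, `rigid_transform(read_calib("DurLAR_calibs/calib_lidar_to_cam.txt"))` would map homogeneous LiDAR points `[x, y, z, 1]` into the camera frame by left multiplication.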

Get Started

- Download the calibration files

- Download the calibration files (v2, targetless)

- Download the exemplar ROS bag (for targetless calibration)

- Download the exemplar dataset (600 frames)

- Download the full dataset (Fill in the form to request access to the full dataset)

Note that the exemplar dataset (600 frames) does not include CSV header rows. Refer to Header of csv files for the first line of each CSV file.

Calibration files (v2, targetless): Following the publication of the DurLAR dataset and the corresponding paper, we identified a more advanced targetless calibration method (#4) that outperforms the LiDAR-camera calibration technique previously employed. We provide an exemplar ROS bag for targetless calibration, together with the corresponding calibration results (v2). Please refer to the Appendix (arXiv) for more details.

Access to the full dataset

Access to the complete DurLAR dataset can be requested in either of the following ways:

1. Access request form for the full dataset

2. OneDrive link. If you need access to the DurLAR dataset, please send your affiliation, name, and intended usage to i@luisli.org, and we will provide the access password.

Usage of the downloading script

Upon completion of the form, the download script durlar_download and accompanying instructions will be automatically provided. The DurLAR dataset can then be downloaded via the command line.

Before first use, you will most likely need to make the durlar_download file executable:

chmod +x durlar_download

By default, this script downloads the small subset for simple testing. Use the following command:

./durlar_download

It is also possible to select and download various test drives:

usage: ./durlar_download [dataset_sample_size] [drive]

dataset_sample_size = [ small | medium | full ]

drive = 1 ... 5

Given the substantial size of the DurLAR dataset, please download the complete dataset only when necessary:

./durlar_download full 5

Keep your network connection stable and uninterrupted for the entire download. If the download is interrupted by network issues, delete all DurLAR dataset folders and rerun the download script. Currently, the script supports only Ubuntu (tested on Ubuntu 18.04 and Ubuntu 20.04, amd64). To download the DurLAR dataset on other operating systems, please refer to Durham Collections for instructions.

CSV format for imu, gps, and lux topics

Format description

Our imu, gps, and lux data are all in CSV format. The first row of each CSV file contains headers that describe the meaning of each column. Taking the imu CSV file as an example (only the first 9 columns are described):

- %time: Timestamps in Unix epoch format.

- field.header.seq: Sequence numbers.

- field.header.stamp: Header timestamps.

- field.header.frame_id: Frame of reference, labeled as "gps".

- field.orientation.x: X-component of the orientation quaternion.

- field.orientation.y: Y-component of the orientation quaternion.

- field.orientation.z: Z-component of the orientation quaternion.

- field.orientation.w: W-component of the orientation quaternion.

- field.orientation_covariance0: First element of the orientation covariance matrix (a 3x3 matrix stored row-major in field.orientation_covariance0 to field.orientation_covariance8).
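The orientation is stored as a quaternion (x, y, z, w). If roll, pitch and yaw are easier to work with, a minimal conversion looks like the sketch below. It uses the common ZYX (yaw, pitch, roll) convention, which is an assumption about how you want the angles expressed rather than something the dataset prescribes; `quaternion_to_euler` is an illustrative name.

```python
import math


def quaternion_to_euler(x, y, z, w):
    """Convert a unit quaternion (x, y, z, w) to (roll, pitch, yaw) in radians.

    ZYX convention: yaw about z, then pitch about y, then roll about x.
    """
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp so rounding error cannot push the argument outside asin's domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw
```

Applied row by row to the field.orientation.x, .y, .z and .w columns, this gives the vehicle heading (yaw) for each IMU sample.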

Header of csv files

The first line (header row) of each CSV file is as follows.

For the GPS,

time,field.header.seq,field.header.stamp,field.header.frame_id,field.status.status,field.status.service,field.latitude,field.longitude,field.altitude,field.position_covariance0,field.position_covariance1,field.position_covariance2,field.position_covariance3,field.position_covariance4,field.position_covariance5,field.position_covariance6,field.position_covariance7,field.position_covariance8,field.position_covariance_type

For the IMU,

time,field.header.seq,field.header.stamp,field.header.frame_id,field.orientation.x,field.orientation.y,field.orientation.z,field.orientation.w,field.orientation_covariance0,field.orientation_covariance1,field.orientation_covariance2,field.orientation_covariance3,field.orientation_covariance4,field.orientation_covariance5,field.orientation_covariance6,field.orientation_covariance7,field.orientation_covariance8,field.angular_velocity.x,field.angular_velocity.y,field.angular_velocity.z,field.angular_velocity_covariance0,field.angular_velocity_covariance1,field.angular_velocity_covariance2,field.angular_velocity_covariance3,field.angular_velocity_covariance4,field.angular_velocity_covariance5,field.angular_velocity_covariance6,field.angular_velocity_covariance7,field.angular_velocity_covariance8,field.linear_acceleration.x,field.linear_acceleration.y,field.linear_acceleration.z,field.linear_acceleration_covariance0,field.linear_acceleration_covariance1,field.linear_acceleration_covariance2,field.linear_acceleration_covariance3,field.linear_acceleration_covariance4,field.linear_acceleration_covariance5,field.linear_acceleration_covariance6,field.linear_acceleration_covariance7,field.linear_acceleration_covariance8

For the LUX,

time,field.header.seq,field.header.stamp,field.header.frame_id,field.illuminance,field.variance
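Because the exemplar (600-frame) subset ships without these header rows, a reader may want to attach the column names itself. A minimal pandas sketch, assuming the header, when present, starts with `time` or `%time`; `read_topic_csv` is an illustrative helper, and the column list is copied from the LUX header above (the GPS and IMU headers can be used the same way).

```python
import pandas as pd

# Column names copied from the LUX header listed above.
LUX_COLUMNS = [
    "time", "field.header.seq", "field.header.stamp",
    "field.header.frame_id", "field.illuminance", "field.variance",
]


def read_topic_csv(path, columns):
    """Read a DurLAR CSV topic whether or not its header row is present."""
    with open(path) as f:
        first_cell = f.readline().split(",", 1)[0].strip()
    if first_cell.lstrip("%") == columns[0]:
        return pd.read_csv(path)  # header row present: use it as-is
    # No header (e.g. the exemplar subset): supply the names ourselves.
    return pd.read_csv(path, header=None, names=columns)
```

For example, `read_topic_csv("lux/data.csv", LUX_COLUMNS)["field.illuminance"]` yields the illuminance series for both the exemplar and the full dataset.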

To process the csv files

The CSV files can be processed with a variety of tools, for example:

Python: Use the pandas library to read the CSV file with the following code:

import pandas as pd

# Read one CSV topic (e.g. imu/data.csv); its first row is used as the column header
df = pd.read_csv('data.csv')
print(df)

Text Editors: Simple text editors like Notepad (Windows) or TextEdit (Mac) can also open CSV files, though they are less suited for data analysis.

Folder and Frame-Count Verification

For easy verification of folder data and integrity, we provide the number of frames in each drive folder, as well as the MD5 checksums of the zip files.

| Folder | # of Frames |
|---|---|
| 20210716 | 41993 |
| 20210901 | 23347 |
| 20211012 | 28642 |
| 20211208 | 26850 |
| 20211209 | 25079 |
| Total | 145911 |
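A minimal sketch of how these checks could be automated, assuming the extracted folders are named `DurLAR_<date>` as in the structure above and that the frame count refers to the files in `ouster_points/data` (which topic the table counts is an assumption). `check_drive` and `md5sum` are illustrative helpers; compare the digest against the published checksum for each zip file.

```python
import hashlib
from pathlib import Path

# Frame counts per drive folder, taken from the table above.
EXPECTED_FRAMES = {
    "20210716": 41993,
    "20210901": 23347,
    "20211012": 28642,
    "20211208": 26850,
    "20211209": 25079,
}


def count_frames(drive_dir, topic="ouster_points", ext=".bin"):
    """Count frame files in <drive_dir>/<topic>/data (assumed layout)."""
    return sum(1 for _ in (Path(drive_dir) / topic / "data").glob(f"*{ext}"))


def check_drive(root, date):
    """Return (ok, found) for the folder <root>/DurLAR_<date>."""
    found = count_frames(Path(root) / f"DurLAR_{date}")
    return found == EXPECTED_FRAMES[date], found


def md5sum(path, chunk_size=1 << 20):
    """MD5 hex digest of a (possibly very large) zip file, read in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Reading in chunks keeps memory use constant, which matters for zip files of this size.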

Intrinsic Parameters of Our Ouster OS1-128 LiDAR

The intrinsic JSON file of our LiDAR can be downloaded at this link. For more information, refer to the official OS1-128 user manual.

Please note that sensitive information, such as the serial number and unique device ID, has been redacted (indicated as XXXXXXX).
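The per-beam angles in the intrinsic file are what you need to project range images back to 3D. A minimal sketch, assuming the file follows Ouster's metadata conventions: older metadata stores `beam_altitude_angles` and `beam_azimuth_angles` (in degrees) at the top level, while newer firmware nests them under `beam_intrinsics`. Both layouts are tried; adjust the keys if the released file differs. The helper names are illustrative.

```python
import json


def load_beam_angles(path):
    """Return (altitude_deg, azimuth_deg) per-beam angle lists from Ouster metadata JSON."""
    with open(path) as f:
        meta = json.load(f)
    source = meta.get("beam_intrinsics", meta)  # newer nested layout, else top level
    altitude = source["beam_altitude_angles"]
    azimuth = source["beam_azimuth_angles"]
    if len(altitude) != len(azimuth):
        raise ValueError("altitude and azimuth angle counts differ")
    return altitude, azimuth


def vertical_fov_deg(altitude):
    """Vertical field of view spanned by the beams, in degrees."""
    return max(altitude) - min(altitude)
```

For the OS1-128, both lists should contain 128 entries, one per channel.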

Reference

If you make use of this work in any way (including our dataset and toolkits), please cite the following paper in any report, publication, presentation, software release or any other associated materials:

DurLAR: A High-fidelity 128-channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-modal Autonomous Driving Applications (Li Li, Khalid N. Ismail, Hubert P. H. Shum and Toby P. Breckon), In Int. Conf. 3D Vision, 2021. [pdf] [video] [poster]

@inproceedings{li21durlar,
  author = {Li, L. and Ismail, K.N. and Shum, H.P.H. and Breckon, T.P.},
  title = {DurLAR: A High-fidelity 128-channel LiDAR Dataset with Panoramic Ambient and Reflectivity Imagery for Multi-modal Autonomous Driving Applications},
  booktitle = {Proc. Int. Conf. on 3D Vision},
  year = {2021},
  month = {December},
  publisher = {IEEE},
  keywords = {autonomous driving, dataset, high-resolution LiDAR, flash LiDAR, ground truth depth, dense depth, monocular depth estimation, stereo vision, 3D},
  category = {automotive 3Dvision},
}