
CropMask_RCNN is a project to train and deploy instance segmentation models for mapping fallow and irrigated center pivot agriculture from multispectral satellite imagery. The primary goal is to develop a model, or set of models, that can map center pivot agriculture with high precision and recall across different dryland agriculture regions. The main strategy I'm using relies on transfer learning from COCO, followed by fine-tuning on Landsat tiles from multiple cloud-free scenes acquired in Nebraska during the 2005 growing season (late May to early October). This time period coincides with the labeled dataset described below. The project extends matterport's module, which is an implementation of Mask R-CNN on Python 3, Keras, and TensorFlow. CropMask_RCNN works with multispectral Landsat satellite imagery, contains infrastructure-as-code via Terraform to build a GPU-enabled Azure Data Science VM (from Andreus Affenhaeuser's guide), and provides a REST API for submitting Landsat GeoTIFF imagery for center pivot detection.

See matterport's mrcnn repo for an explanation of the Mask R-CNN architecture, a general guide to the notebook tutorials, and notebooks for inspecting model inputs and outputs.

For an overview of the project, see the poster I presented at the Fall 2018 Meeting on Center Pivot Crop Water Use.

Nebraska Results

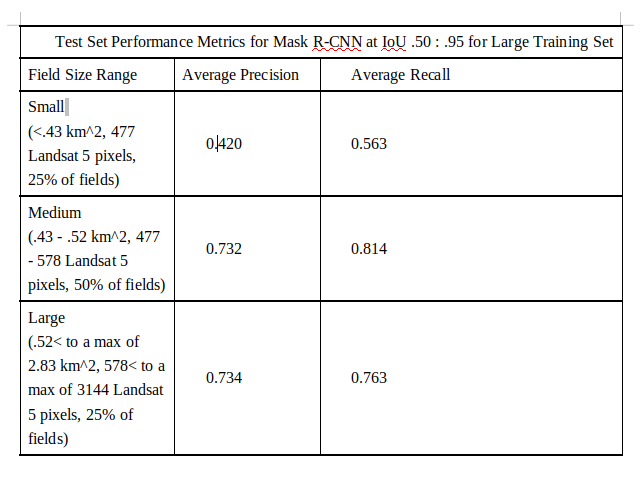

This is a non-exhaustive overview of models and configurations trained and validated on Landsat Analysis Ready Data (ARD) and evaluated on held-out Landsat ARD tiles. Reported numbers include both mAP@IoU=0.50:0.95 and mAP@IoU=0.5.
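
To make the two metrics concrete, here is a minimal sketch of the mask IoU criterion behind them. It is not the evaluation code used in this repo; the helper names and the toy 4x4 masks are made up for illustration. mAP@IoU=0.5 counts a predicted field as a match when its mask IoU with a labeled field is at least 0.5, while mAP@IoU=0.50:0.95 averages over the ten thresholds 0.50, 0.55, ..., 0.95.

```python
def mask_iou(mask_a, mask_b):
    """Intersection over union of two equally sized binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                intersection += 1
            if a or b:
                union += 1
    return intersection / union if union else 0.0


# COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_matched(iou):
    """Thresholds at which a prediction with this IoU would count as a match."""
    return [t for t in IOU_THRESHOLDS if iou >= t]


# Toy example: the prediction misses one column of the labeled field.
predicted = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
labeled = [
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
    [0, 0, 0, 0],
]

iou = mask_iou(predicted, labeled)  # 9 shared pixels / 12 pixels in the union
matched = thresholds_matched(iou)
print(f"IoU = {iou:.2f}, matched at {len(matched)} of {len(IOU_THRESHOLDS)} thresholds")
```

A prediction like this one counts fully toward mAP@IoU=0.5 but only at some thresholds of the stricter 0.50:0.95 average, which is why the second number in the table is always the higher one.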

Unless otherwise noted, all models are fine-tuned from ResNet-50 COCO weights using 4 NVIDIA V100s with three 512x512 int16 images per GPU. Entries are listed in chronological order of testing, from top to bottom. Most defaults in cropmask.mrcnn.configs are kept, with changes tracked in cropmask.model_configs. In this table, I try to note substantial config changes that improved or worsened performance.
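
As a rough illustration of what this setup implies, the sketch below works out the effective batch size. `TrainingSetup` is a hypothetical stand-in, not a class from this repo or from matterport's package; it only borrows the attribute names `GPU_COUNT`, `IMAGES_PER_GPU`, `IMAGE_MIN_DIM`, and `IMAGE_MAX_DIM` from matterport's `Config`, which derives its batch size as the product of the first two.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingSetup:
    """Hypothetical stand-in that mirrors a few matterport Config attribute names."""

    GPU_COUNT: int = 4        # 4 NVIDIA V100s
    IMAGES_PER_GPU: int = 3   # three tiles per GPU
    IMAGE_MIN_DIM: int = 512  # tiles are 512x512
    IMAGE_MAX_DIM: int = 512

    @property
    def BATCH_SIZE(self) -> int:
        # Effective batch size is the number of tiles processed per training step.
        return self.GPU_COUNT * self.IMAGES_PER_GPU


setup = TrainingSetup()
pixels_per_step = setup.BATCH_SIZE * setup.IMAGE_MIN_DIM * setup.IMAGE_MAX_DIM
print(f"{setup.BATCH_SIZE} tiles per step, {pixels_per_step:,} pixels per band per step")
```

Changing the GPU count or the images per GPU changes the effective batch size, so timings and scores in the table are only comparable under the same setup.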

The results for the detectron2-based implementation in this repo are detailed in my [thesis](https://github.com/ecohydro/CropMask_RCNN/blob/master/test-figs/thesis.pdf).

The results below are older ones from some low-performing models trained with matterport's MaskRCNN.

| Backbone | mAP@IoU=0.50:0.95<br/>(mask) | mAP@IoU=0.5<br/>(mask) | Time<br/>(on 4 V100s) | Configurations<br/>(click to expand) |
|---|---|---|---|---|
|  | 0.188 | 0.269 | 2h | <details><summary>super quick</summary>TRAIN_ROIS_PER_IMAGE was set too high, 600 vs 300. According to matterport's MaskRCNN wiki, it's best to aim for 33% positive ROIs per tile, which you can gauge by looking at the distribution of field counts per tile in the inspect_data notebook. Generating too many ROIs led to false positives and false negatives. The next run was better after correcting this.</details> |
|  | 0.198 | 0.297 | 1.5h | <details><summary>standard</summary>Changed TRAIN_ROIS_PER_IMAGE to 300; otherwise the same configs as the model above.</details> |
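
The arithmetic behind the TRAIN_ROIS_PER_IMAGE change can be sketched as follows. This assumes matterport's default `ROI_POSITIVE_RATIO` of 0.33 was left unchanged and that the positive-ROI target is the truncated product of the two settings; `positive_roi_target` is a hypothetical helper written for this example, not a function from this repo.

```python
ROI_POSITIVE_RATIO = 0.33  # matterport default, assumed unchanged in these runs


def positive_roi_target(train_rois_per_image, positive_ratio=ROI_POSITIVE_RATIO):
    """Number of positive ROIs needed per tile to fill the positive share."""
    return int(train_rois_per_image * positive_ratio)


for rois in (600, 300):
    print(f"TRAIN_ROIS_PER_IMAGE={rois}: aims for {positive_roi_target(rois)} positive ROIs per tile")
```

Under these assumptions, the first run would have needed about twice as many positive ROIs per tile as the second. Comparing these targets with the field counts per tile from the inspect_data notebook is one rough way to judge whether a setting is realistic.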

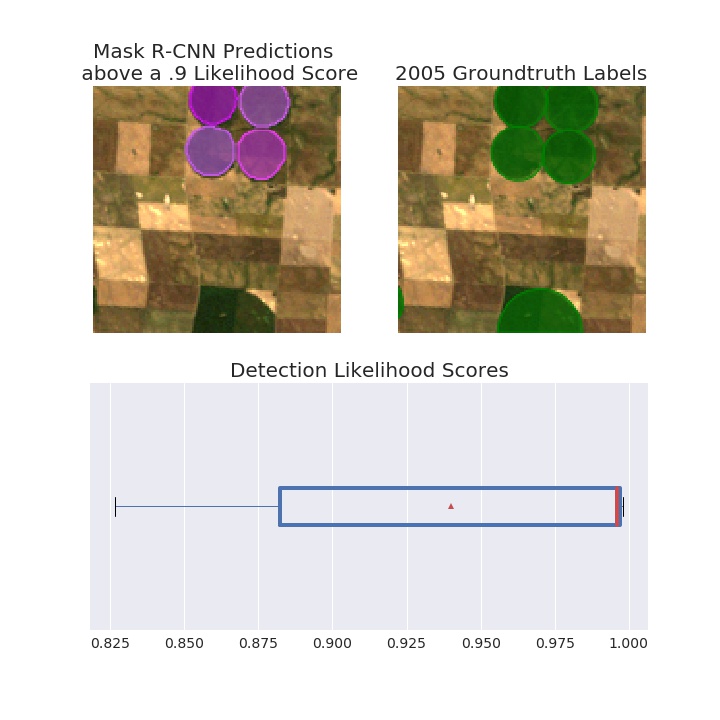

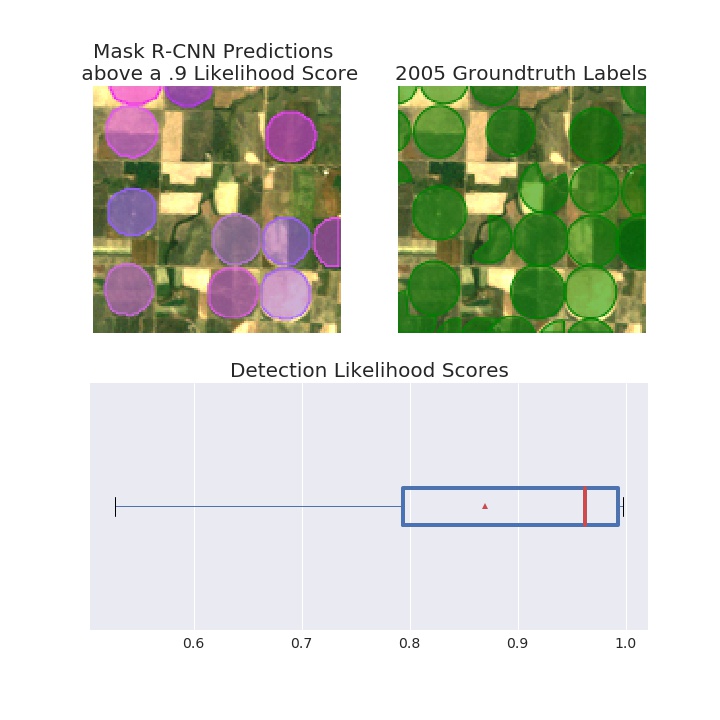

Some example results on test set images