OptimizeMVS

Created by Yi Wei, Shaohui Liu and Wang Zhao from Tsinghua University.

Introduction

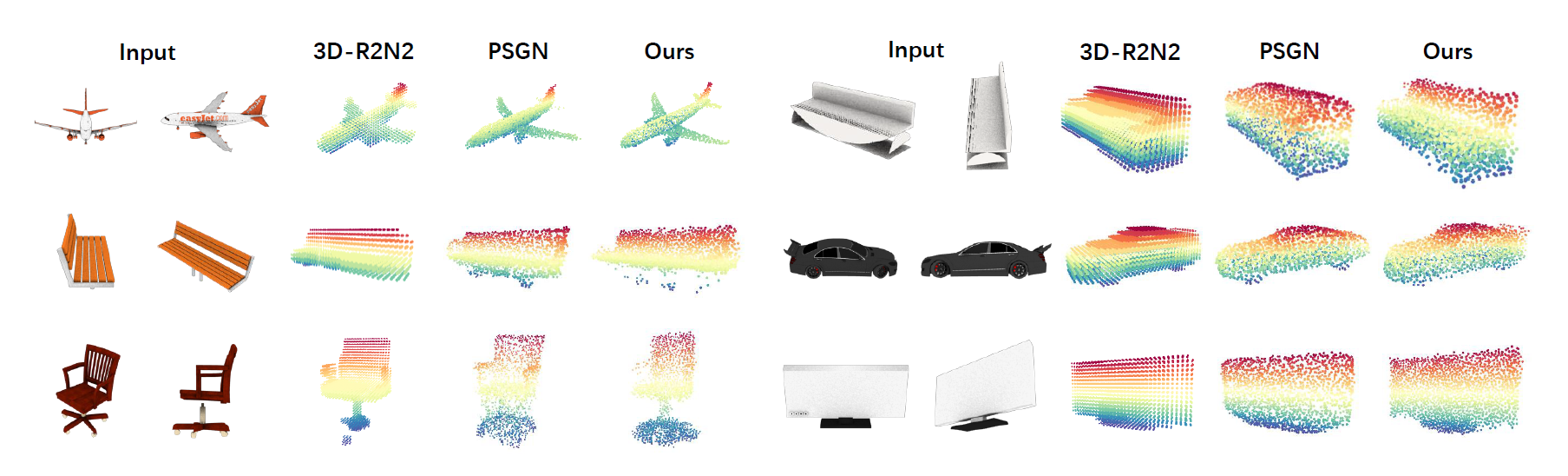

This repository contains the TensorFlow source code for the paper Conditional Single-view Shape Generation for Multi-view Stereo Reconstruction.

Installation

The code has been tested with Python 2.7 and TensorFlow 1.3.0 on Ubuntu 16.04.

1. Clone the code

git clone https://github.com/weiyithu/OptimizeMVS.git

2. Install packages

A Python virtual environment is recommended.

cd OptimizeMVS

virtualenv env

source ./env/bin/activate

pip install -r requirements.txt

You need to compile the external libraries:

sh compile.sh
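
Before training, it can help to confirm that the active environment matches the tested versions (Python 2.7 and TensorFlow 1.3.0). The following is a minimal sketch, not part of the repository: the helper name tf_version is hypothetical, and it assumes the virtual environment is active so that python resolves to the interpreter in ./env.

```shell
# Print the interpreter version. Python 2.7 writes it to stderr, hence 2>&1.
python --version 2>&1

# Hypothetical helper: print the installed TensorFlow version,
# or "not installed" if the import (or the interpreter itself) fails.
tf_version() {
  python -c "import tensorflow as tf; print(tf.__version__)" 2>/dev/null \
    || echo "not installed"
}

echo "TensorFlow: $(tf_version)"
```

If the reported versions differ from the tested ones, the code may still run, but this has not been verified here.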

Usage

1. One-button setup

sh init_data.sh

2. Two-stage training

sh train.sh

Default training options are stored in the config folder.

3. Evaluation

You can download our pretrained models (single-category and multi-category) and move them into a folder named demo for evaluation.

sh download_trained_model.sh

To evaluate your own model, set the 'load' option in test.sh to the path of your model.

sh test.sh

The results might be slightly better than those reported in the paper.

Acknowledgements

Some of the external operators are borrowed from latent_3d_points and PointNet++. The multi-view images were rendered from ShapeNetCore using the preprocessing scripts in mvcSnP, and the point cloud data comes from latent_3d_points. We sincerely thank the authors for their kind help.

This work was supported in part by the National Natural Science Foundation of China under Grant U1813218, Grant 61822603, Grant U1713214, Grant 61672306, and Grant 61572271.

Citation

If you find this work useful in your research, please consider citing:

@inproceedings{wei2019conditional,

author = {Wei, Yi and Liu, Shaohui and Zhao, Wang and Lu, Jiwen and Zhou, Jie},

title = {Conditional Single-view Shape Generation for Multi-view Stereo Reconstruction},

booktitle = {Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2019}

}

The first three authors contributed equally.