
DeepBedMap [paper] [poster] [presentation]

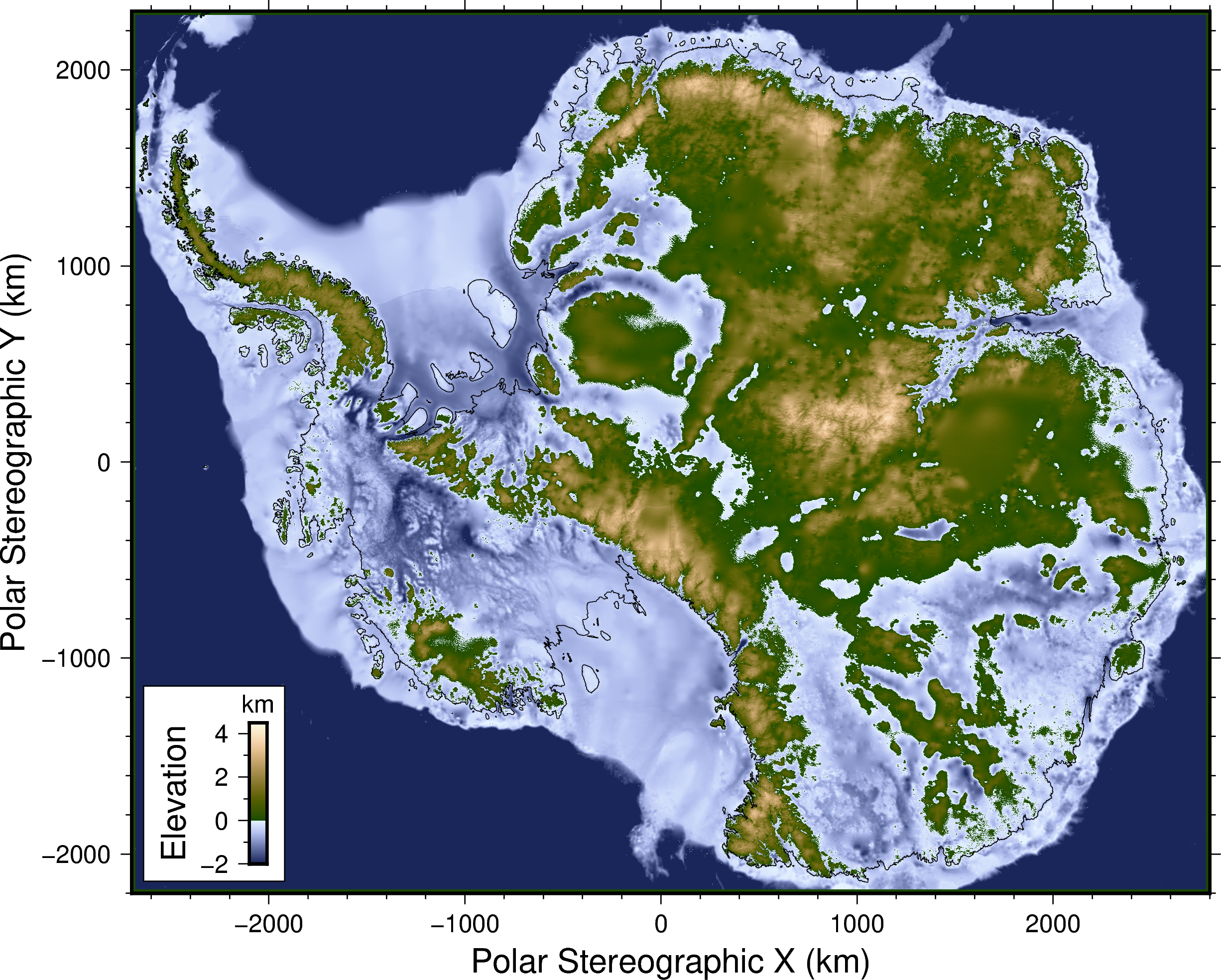

Going beyond BEDMAP2 using a super resolution deep neural network. Also a convenient flat file data repository for high resolution bed elevation datasets around Antarctica.

```
deepbedmap/
├── features/ (files describing the high level behaviour of various features)
│   ├── *.feature... (easily understandable specifications written using the Given-When-Then gherkin language)
│   └── README.md (markdown information on the feature files)
├── highres/ (contains high resolution localized DEMs)
│   ├── *.txt/csv/grd/xyz... (input vector file containing the point-based bed elevation data)
│   ├── *.json (the pipeline file used to process the xyz point data)
│   ├── *.nc (output raster netcdf files)
│   └── README.md (markdown information on highres data sources)
├── lowres/ (contains low resolution whole-continent DEMs)
│   ├── bedmap2_bed.tif (the low resolution DEM!)
│   └── README.md (markdown information on lowres data sources)
├── misc/ (miscellaneous raster datasets)
│   ├── *.tif (Surface DEMs, Ice Flow Velocity, etc. See list in Issue #9)
│   └── README.md (markdown information on miscellaneous data sources)
├── model/ (*hidden in git, neural network model related files)
│   ├── train/ (a place to store the raster tile bounds and model training data)
│   └── weights/ (contains the neural network model's architecture and weights)
├── .env (environment variable config file used by pipenv)
├── .<something>ignore (files ignored by a particular piece of software)
├── .<something else> (stuff to make the code in this repo look and run nicely, e.g. linters, CI/CD config files, etc.)
├── Dockerfile (set of commands to fully reproduce the software stack here into a docker image, used by binder)
├── LICENSE.md (the license covering this repository)
├── Pipfile (what you want, the summary list of core python dependencies)
├── Pipfile.lock (what you need, all the pinned python dependencies for full reproducibility)
├── README.md (the markdown file you're reading now)
├── data_list.yml (human and machine readable list of the datasets and their metadata)
├── data_prep.ipynb/py (paired jupyter notebook/python script that prepares the data)
├── deepbedmap.ipynb/py (paired jupyter notebook/python script that predicts an Antarctic bed digital elevation model)
├── environment.yml (conda binary packages to install)
├── paper_figures.ipynb/py (paired jupyter notebook/python script to produce figures for the DeepBedMap paper)
├── srgan_train.ipynb/py (paired jupyter notebook/python script that trains the ESRGAN neural network model)
└── test_ipynb.ipynb/py (paired jupyter notebook/python script that runs doctests in the other jupyter notebooks!)
```
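Several top-level entries above are paired jupyter notebook/python script files. As a minimal sketch for spotting a notebook whose `.py` twin is missing, assuming that "paired" simply means the two files share a basename (e.g. `data_prep.ipynb` and `data_prep.py`), you could use something like the following. The function name `find_unpaired_notebooks` is hypothetical and is not part of this repo.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): print every *.ipynb in a
# directory that has no same-named *.py file next to it.
# Assumption: a "paired" notebook/script shares its basename, as in the tree above.
find_unpaired_notebooks() {
  local dir="${1:-.}"
  local nb
  for nb in "$dir"/*.ipynb; do
    # If the glob matched nothing, bash leaves the pattern unexpanded; skip it.
    [ -e "$nb" ] || continue
    if [ ! -e "${nb%.ipynb}.py" ]; then
      printf '%s\n' "$nb"
    fi
  done
}

# Usage (from the repository root): no output means every notebook has a .py twin.
# find_unpaired_notebooks .
```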

Getting started

Quickstart

Launch in Binder (Interactive jupyter notebook/lab environment in the cloud).

Installation

Start by cloning this repository:

```shell
git clone <repo-url>
```

Then I recommend using conda to install the non-python binaries (e.g. GMT, CUDA, etc.). This also creates a conda virtual environment with Python and pipenv installed.

```shell
cd deepbedmap
conda env create -f environment.yml
```

Activate the conda environment first.

```shell
conda activate deepbedmap
```

Then set some environment variables before using pipenv to install the necessary python libraries, otherwise you may encounter some problems (see Common problems below).

You may want to ensure that `which pipenv` returns something similar to `~/.conda/envs/deepbedmap/bin/pipenv`.

```shell
export HDF5_DIR=$CONDA_PREFIX/
export LD_LIBRARY_PATH=$CONDA_PREFIX/lib/
pipenv install --python $CONDA_PREFIX/bin/python --dev

# or just
HDF5_DIR=$CONDA_PREFIX/ LD_LIBRARY_PATH=$CONDA_PREFIX/lib/ pipenv install --python $CONDA_PREFIX/bin/python --dev
```
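The `which pipenv` check mentioned above can also be scripted. This is a small sketch that assumes `conda activate deepbedmap` has set `CONDA_PREFIX`. It uses `command -v`, the shell builtin counterpart of `which`, and `pipenv_in_conda_env` is a hypothetical name rather than anything shipped with this repo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if pipenv resolves to <conda prefix>/bin/pipenv.
# Arguments are optional and default to the live values, which makes testing easy:
#   $1 = path to pipenv (default: whatever `command -v pipenv` finds)
#   $2 = conda env prefix (default: $CONDA_PREFIX)
pipenv_in_conda_env() {
  local pipenv_path="${1:-$(command -v pipenv)}"
  local prefix="${2:-${CONDA_PREFIX:-}}"
  if [ -z "$prefix" ]; then
    echo "pipenv_in_conda_env: no conda environment is active" >&2
    return 1
  fi
  # Strip one trailing slash so both /env and /env/ compare the same way.
  [ "$pipenv_path" = "${prefix%/}/bin/pipenv" ]
}

# Usage:
# pipenv_in_conda_env || echo "pipenv is not the one from the deepbedmap env"
```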

Finally, double-check that the libraries have been installed.

```shell
pipenv graph
```

Syncing/Updating to new dependencies

```shell
conda env update -f environment.yml
pipenv sync --dev
```

Common problems

Note that the `.env` file stores some environment variables. So if you run `conda activate deepbedmap` followed by some other command and get an `...error while loading shared libraries: libpython3.7m.so.1.0...`, you may need to run `pipenv shell` or `pipenv run <cmd>` so that those environment variables are loaded properly.

Or just run this first:

```shell
export LD_LIBRARY_PATH=$CONDA_PREFIX/lib/
```

Also, if pipenv fails to install `netcdf4`, make sure you have run:

```shell
export HDF5_DIR=$CONDA_PREFIX/
```

and then try `pipenv install` or `pipenv sync` again.

See also this issue for more information.
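If you would rather not export these variables into your whole shell session, here is a sketch of a wrapper that sets both only for a single command. It follows the same pattern as the one-line `pipenv install` command in the Installation section. `with_conda_libs` is a hypothetical name, and it assumes `CONDA_PREFIX` has been set by an activated conda environment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command with HDF5_DIR and LD_LIBRARY_PATH set
# from the active conda env, without exporting them into the current shell.
with_conda_libs() {
  if [ -z "${CONDA_PREFIX:-}" ]; then
    echo "with_conda_libs: activate the conda environment first" >&2
    return 1
  fi
  # Prefix assignments apply only to the environment of the command "$@".
  HDF5_DIR="$CONDA_PREFIX/" LD_LIBRARY_PATH="$CONDA_PREFIX/lib/" "$@"
}

# Usage:
# with_conda_libs pipenv sync --dev
```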

Running jupyter lab

```shell
conda activate deepbedmap
pipenv shell
python -m ipykernel install --user --name deepbedmap  # register this environment as a jupyter kernel
jupyter kernelspec list --json  # check that the kernel is installed
jupyter lab &
```
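You can also check the output of `jupyter kernelspec list --json` from a script instead of reading the JSON by eye. Here is a rough sketch that looks for the kernel name as a key in that output. It uses `grep` instead of a real JSON parser, so treat it as a convenience check only. `has_kernel` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jupyter kernelspec list --json` output on stdin and
# succeed if a kernelspec named $1 appears as a JSON key, i.e. "name": ...
# Caveat: plain text matching, not JSON parsing; names containing regex
# metacharacters (such as '.') are not escaped.
has_kernel() {
  local name="$1"
  grep -q "\"${name}\"[[:space:]]*:"
}

# Usage:
# jupyter kernelspec list --json | has_kernel deepbedmap && echo "kernel installed"
```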

Citing

The paper is published at The Cryosphere and can be referred to using the following BibTeX code:

```bibtex
@Article{tc-14-3687-2020,
  AUTHOR = {Leong, W. J. and Horgan, H. J.},
  TITLE = {DeepBedMap: a deep neural network for resolving the bed topography of Antarctica},
  JOURNAL = {The Cryosphere},
  VOLUME = {14},
  YEAR = {2020},
  NUMBER = {11},
  PAGES = {3687--3705},
  URL = {https://tc.copernicus.org/articles/14/3687/2020/},
  DOI = {10.5194/tc-14-3687-2020}
}
```

The DeepBedMap_DEM v1.1.0 dataset is available from Zenodo at https://doi.org/10.5281/zenodo.4054246. Neural network model training experiment runs are also recorded at https://www.comet.ml/weiji14/deepbedmap. Python code for the DeepBedMap model here on Github is also mirrored on Zenodo at https://doi.org/10.5281/zenodo.3752613.