Android Antimalware

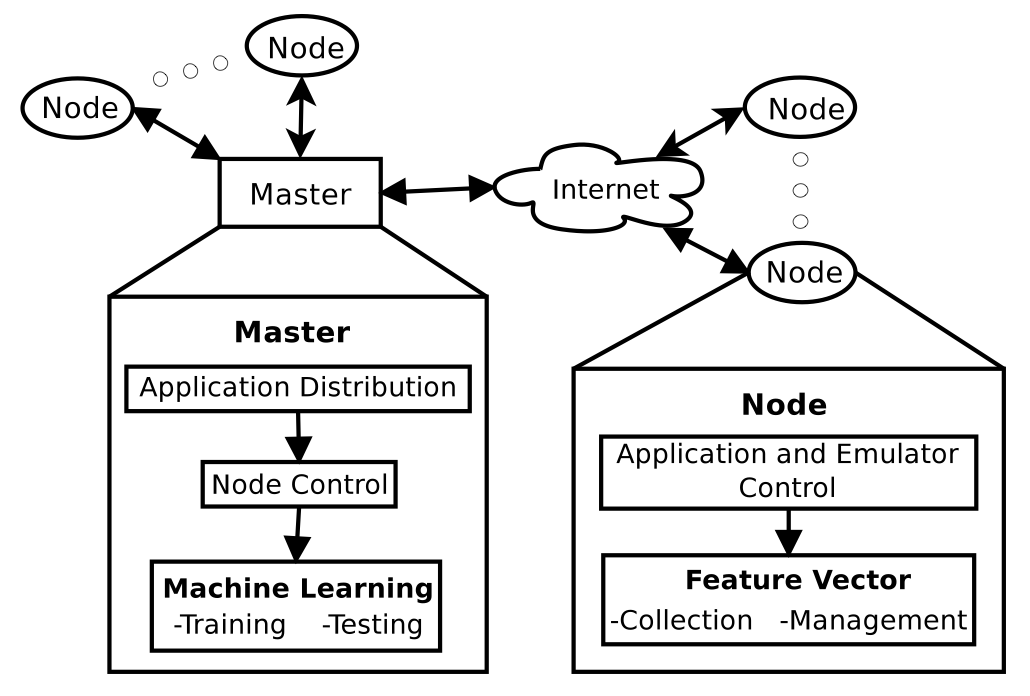

An important concern on the growing Android platform is malware detection. Malware detection techniques on Android are similar to those used on any platform. Detection falls into three broad approaches: static analysis, which examines a compiled file; dynamic analysis, which examines runtime behavior such as the battery, memory, and network utilization of the device; and hybrid analysis, which combines static and dynamic techniques. Static analysis is advantageous on memory-limited Android devices because the malware is not executed, only analyzed. However, dynamic analysis provides additional protection, particularly against polymorphic malware that changes form during execution. This project provides the STREAM framework, which profiles applications to obtain feature vectors for dynamic analysis.

This work was presented at the International Wireless Communications and Mobile Computing Conference (IWCMC) 2013, and the paper is available here.

The feature vectors and classifiers are available for further analysis in the Results/IWCMC-2013 directory.

As a project matures, its design decisions are tested. Here, the decision to build the framework on shell scripts did not deliver reliable control over error-prone emulators on a distributed system. This project has therefore been deprecated and remains online as a historical archive. We are actively designing a new framework in Scala.

Experiment: Malware Classifier Performance

STREAM resides on the Android Tactical Application Assessment & Knowledge (ATAACK) Cloud, a hardware platform designed to provide a testbed for cloud-based analysis of mobile applications. The ATAACK Cloud currently uses a 34-node cluster in which each machine is a Dell PowerEdge M610 blade running CentOS 6.3. Each node has 2 Intel Xeon 564 processors with 12 cores each, along with 36 GB of DDR3 ECC memory.

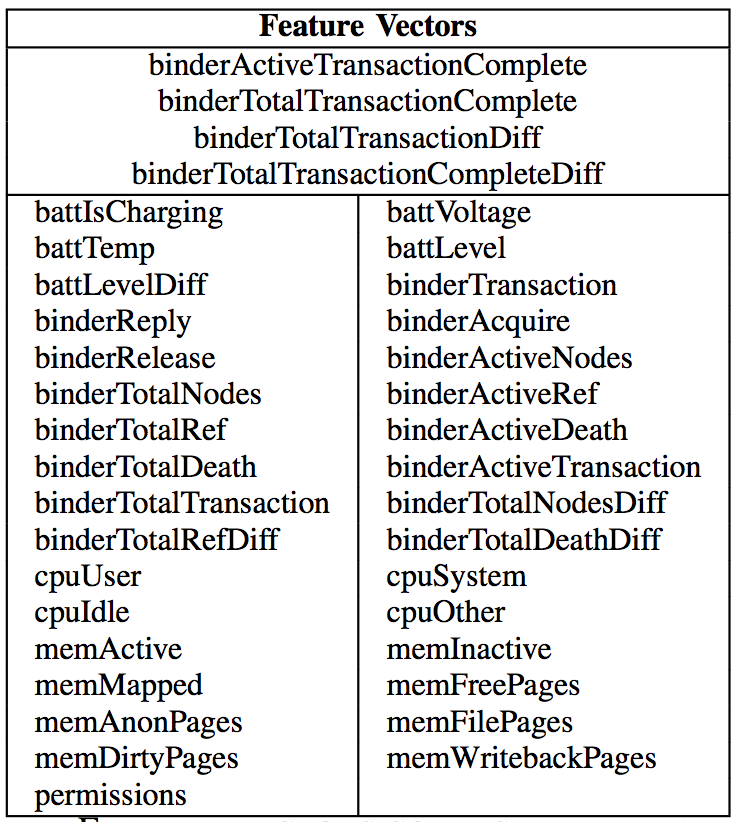

We used STREAM to send 10,000 input events to each application in the data set and collect a feature vector every 5 seconds. We collected the following set of features.

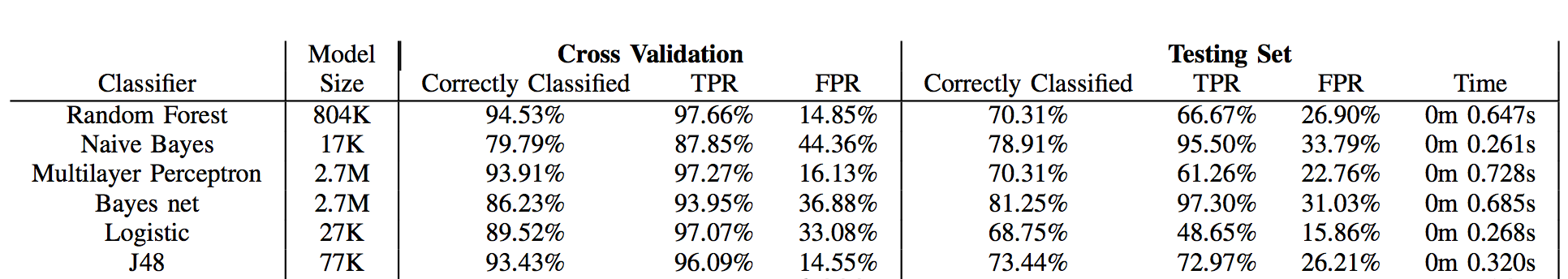
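The collection procedure described above (10,000 input events per application, one feature vector every 5 seconds) can be sketched as follows. This is a minimal illustration, not STREAM's own code: the source does not say how input events are generated, so using Android's `monkey` tool through `adb` is an assumption, and `monkey_command`, `collect_vectors`, `sample`, and `is_running` are hypothetical names.

```python
import time
from typing import Callable, Dict, List


def monkey_command(serial: str, package: str, events: int = 10_000) -> List[str]:
    """Build (but do not run) an adb command that sends random input events.

    Assumption: events are generated with Android's `monkey` tool.
    """
    return ["adb", "-s", serial, "shell", "monkey", "-p", package, str(events)]


def collect_vectors(
    sample: Callable[[], Dict[str, float]],
    is_running: Callable[[], bool],
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Dict[str, float]]:
    """Call `sample` once per interval for as long as the event run lasts."""
    vectors: List[Dict[str, float]] = []
    while is_running():
        vectors.append(sample())  # one feature vector per interval
        sleep(interval_s)
    return vectors
```

In practice the command list could be handed to `subprocess.Popen`, with `is_running` checking whether `process.poll()` is still `None`. The `sleep` parameter is injectable so the loop can be exercised without waiting.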

Feature vectors collected from the training set of applications were used to create classifiers, and feature vectors from the testing set were then used to evaluate those classifiers. Classification rates for the testing set are based on the 47 testing applications used. Future work includes increasing the testing set size to increase confidence in these results.

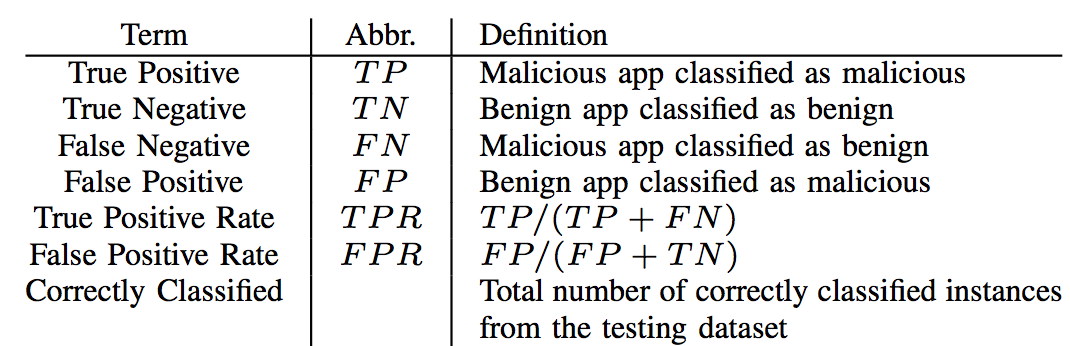

The following table shows descriptions of the metrics used to evaluate classifiers.

The overall results of training and testing six machine learning algorithms with STREAM are shown in the following table.

There is a clear difference between the correct classification percentage on the cross-validation set (made up of applications used in training) and on the testing set (made up of applications never used in training). Feature vectors from the training set are classified quite well, typically over 85% correct, whereas new feature vectors from the testing set are often only 70% correct. Classifier performance should therefore not be judged on cross-validation alone, because it is prone to inflated accuracy results.
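To compare cross-validation and testing results on equal terms, the same scoring function can be applied to both sets of predictions. The sketch below uses the standard definitions of correct classification rate, true positive rate, and false positive rate, with malicious as the positive class. Whether these match the metrics used in the evaluation is an assumption, and `evaluate` and the `"M"`/`"B"` labels are hypothetical choices.

```python
from typing import Dict, Sequence


def evaluate(actual: Sequence[str], predicted: Sequence[str]) -> Dict[str, float]:
    """Score predictions, treating "M" (malicious) as the positive class."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and equal length")
    pairs = list(zip(actual, predicted))
    tp = sum(a == "M" and p == "M" for a, p in pairs)  # malware caught
    fn = sum(a == "M" and p == "B" for a, p in pairs)  # malware missed
    fp = sum(a == "B" and p == "M" for a, p in pairs)  # benign flagged
    tn = sum(a == "B" and p == "B" for a, p in pairs)  # benign passed
    return {
        "correct": (tp + tn) / len(pairs),
        "tpr": tp / (tp + fn) if tp + fn else 0.0,
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
    }


# Made-up labels, for illustration only.
scores = evaluate(["M", "M", "B", "B", "B"], ["M", "B", "B", "M", "B"])
print(scores["correct"], scores["tpr"])  # 0.6 0.5
```

Running this once on cross-validation predictions and once on predictions for applications never seen in training makes the gap described above directly visible.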

Using the code.

Add and label APK files.

Populate TestSuite/Training and/or TestSuite/Testing with APK files named in the format <M/B><Number>-<Name>.apk, where

- <M/B> represents the classification of the application (malicious or benign),
- <Number> represents an optional, arbitrary number to help with identification and sorting of different types of applications, and
- <Name> is the name or identifier of the application.

An example would be B001-FooApp.apk.
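A small check of the naming format can catch mislabeled files before a long profiling run. The following is a sketch under stated assumptions: the label is an uppercase M or B, the optional number consists of digits only, and the hyphen is always present. `parse_apk_name` and `LabeledApk` are hypothetical names and are not part of STREAM.

```python
import re
from dataclasses import dataclass

# <M/B><Number>-<Name>.apk, with <Number> optional (assumed to be digits only).
APK_NAME = re.compile(r"(?P<label>[MB])(?P<number>\d*)-(?P<name>.+)\.apk")


@dataclass(frozen=True)
class LabeledApk:
    malicious: bool
    number: str  # empty string when no number was given
    name: str


def parse_apk_name(filename: str) -> LabeledApk:
    """Split an APK file name into its label, number, and name parts."""
    match = APK_NAME.fullmatch(filename)
    if match is None:
        raise ValueError(f"not in <M/B><Number>-<Name>.apk form: {filename!r}")
    return LabeledApk(match["label"] == "M", match["number"], match["name"])


print(parse_apk_name("B001-FooApp.apk"))
# LabeledApk(malicious=False, number='001', name='FooApp')
```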

Configure emulators and devices.

Set the devices and number of emulators in TestSuite/collect-data.sh.

Collect feature vectors.

Run collect-data.sh <Testing/Training> to start profiling applications and collecting feature vectors.

Feature vectors will be saved to arff/, and the machine learning classifiers will then be trained and tested with arff/weka.sh.
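The arff/ directory name suggests that feature vectors are stored in Weka's ARFF format. The sketch below shows the general shape of such a file for labeled feature vectors. It is not the layout STREAM actually writes: the relation name, the feature names `cpu_usage` and `mem_free`, the class values, and the `to_arff` helper are all hypothetical.

```python
from typing import Dict, List, Sequence


def to_arff(relation: str, features: Sequence[str], rows: List[Dict[str, object]]) -> str:
    """Render labeled feature vectors as ARFF text with numeric attributes."""
    lines = [f"@relation {relation}", ""]
    lines += [f"@attribute {name} numeric" for name in features]
    lines.append("@attribute class {benign,malicious}")  # nominal class label
    lines += ["", "@data"]
    for row in rows:
        values = [str(row[name]) for name in features] + [str(row["class"])]
        lines.append(",".join(values))
    return "\n".join(lines) + "\n"


print(to_arff(
    "stream",
    ["cpu_usage", "mem_free"],
    [{"cpu_usage": 12.5, "mem_free": 2048, "class": "malicious"}],
))
```

Each data row lists the attribute values in the order the attributes were declared, with the class label last.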