
tensorflow seq2seq chatbot

Note: the repository is not maintained. Feel free to PM me if you'd like to take over maintenance.

A general-purpose conversational chatbot based on the popular seq2seq approach, implemented in TensorFlow. Since it doesn't produce good results so far, consider other seq2seq implementations as well.

The current results are pretty lousy:

hello baby - hello

how old are you ? - twenty .

i am lonely - i am not

nice - you ' re not going to be okay .

so rude - i ' m sorry .

Disclaimer:

- the answers are hand-picked (it looks cooler that way)

- the chatbot cannot follow the line of conversation yet; in the example above, any apparent continuity is just a coincidence (the answers were hand-picked)

Everyone is welcome to investigate the code and suggest improvements.

Current tasks

- figure out how to diversify the chatbot's answers (currently the most probable answer is always picked, which makes the replies dull)
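One common way to diversify answers is to sample each output token from the decoder's softmax distribution instead of always taking the argmax, with a temperature that flattens or sharpens that distribution. The sketch below is a standalone illustration, not code from this repository: the function `sample_token` and its `temperature` parameter are hypothetical, and it assumes you can obtain the decoder's logits for one step as a plain list of floats.

```python
import math
import random


def sample_token(logits, temperature=1.0, rng=random):
    """Pick a token index from one decoder step's logits (hypothetical helper).

    temperature <= 0 falls back to greedy argmax, i.e. the current
    "most probable answer" behaviour; larger values give more varied output.
    """
    if temperature <= 0:
        # Greedy decoding: index of the highest logit.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Divide logits by the temperature, then subtract the max before exp()
    # so large logits cannot overflow.
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(x - top) for x in scaled]
    # random.choices normalises the weights itself, which makes this
    # equivalent to sampling from softmax(logits / temperature).
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


# Usage with made-up scores for a 3-word vocabulary:
rng = random.Random(42)
logits = [2.0, 1.0, 0.1]
greedy = sample_token(logits, temperature=0)  # always 0
varied = [sample_token(logits, 1.0, rng) for _ in range(10)]
```

Setting `temperature=0` reproduces the current pick-the-most-probable behaviour; raising it trades coherence for variety, so the value would need tuning against this model's actual outputs.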

Papers

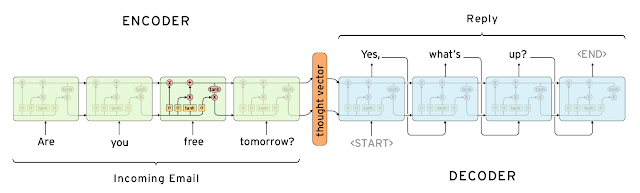

Nice picture

Courtesy of this article.

Setup

```bash
git clone git@github.com:nicolas-ivanov/tf_seq2seq_chatbot.git
cd tf_seq2seq_chatbot
bash setup.sh
```

Run

Train a seq2seq model on a small (17 MB) corpus of movie subtitles:

```bash
python train.py
```

(this command runs the training on a CPU; for GPU training, see the GPU usage section below)

Test the trained model on a set of common questions:

```bash
python test.py
```

Chat with the trained model in the console:

```bash
python chat.py
```

All configuration parameters are stored in `tf_seq2seq_chatbot/configs/config.py`.

GPU usage

If you are lucky enough to already have a proper GPU configuration for TensorFlow, this should do the job:

```bash
python train.py
```

Otherwise, you may need to build TensorFlow from source and run the code as follows:

```bash
cd tensorflow  # cd to the TensorFlow source folder
cp -r ~/tf_seq2seq_chatbot ./  # copy the project's code to the TensorFlow root
bazel build -c opt --config=cuda tf_seq2seq_chatbot:train  # build with the GPU-enabled option
./bazel-bin/tf_seq2seq_chatbot/train  # run the built code
```

Requirements