
Adversarial AutoEncoder

Adversarial Autoencoder [arXiv:1511.05644] implemented with MXNet.
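In the Adversarial Autoencoder, a discriminator learns to tell samples z ~ p(z) drawn from the imposed prior apart from the encoder's codes z ~ q(z). The encoder, acting as the generator, learns to fool it, while the autoencoder also minimizes reconstruction error. The NumPy sketch below only computes those three losses from given discriminator outputs. It is not the code in this repository: the function names are hypothetical, and using mean squared error for reconstruction is an assumption.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Binary cross-entropy averaged over the batch (pred in (0, 1))."""
    pred = np.clip(pred, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred)))

def aae_losses(x, x_recon, d_prior, d_code):
    """Return (reconstruction, discriminator, generator) losses.

    x, x_recon : inputs and decoder reconstructions, same shape
    d_prior    : discriminator outputs on samples z ~ p(z)
    d_code     : discriminator outputs on encoder codes z ~ q(z)
    """
    # Reconstruction phase (MSE is an assumption; BCE is also common for images).
    recon = float(np.mean((x - x_recon) ** 2))
    # Regularization phase, discriminator step: prior samples are "real" (1),
    # encoder codes are "fake" (0).
    disc = bce(d_prior, np.ones_like(d_prior)) + bce(d_code, np.zeros_like(d_code))
    # Regularization phase, encoder step: push codes to be classified as "real".
    gen = bce(d_code, np.ones_like(d_code))
    return recon, disc, gen
```

When the discriminator is maximally confused (every output 0.5), the discriminator loss is 2 ln 2 and the generator loss is ln 2, which is the equilibrium the regularization aims for.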

Requirements

- MXNet

- numpy

- matplotlib

- scikit-learn

- OpenCV

Unsupervised Adversarial Autoencoder

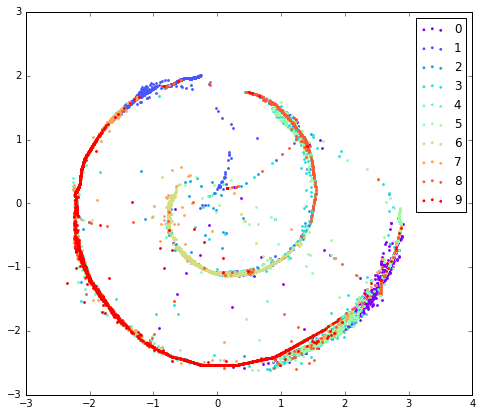

Run `aae_unsupervised.py` to train the model, then set `task` to `unsupervised` in `visualize.ipynb` to display the results. The prior distribution imposed on the 2-D latent variable can be one of {gaussian, gaussian mixture, swiss roll, uniform}. No label information is used during training. In the results below, p(z) denotes the imposed prior and q(z) the aggregated posterior of the encoder's latent codes.
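For illustration, the four kinds of 2-D prior can be sampled with NumPy roughly as follows. This is a minimal sketch, not the repository's sampler: the function name `sample_prior`, the option strings, and every shape and scale parameter (10 mixture components on a circle of radius 2, the Swiss roll's 1.5 turns, the uniform range [-2, 2)) are assumptions chosen for readability.

```python
import numpy as np

def sample_prior(name, n, rng=None):
    """Draw n samples from a 2-D prior. Shapes and scales are illustrative assumptions."""
    rng = np.random.default_rng() if rng is None else rng
    if name == "gaussian":
        # Standard normal in 2-D.
        return rng.normal(0.0, 1.0, size=(n, 2))
    if name == "gaussian_mixture":
        # 10 components on a circle of radius 2, each stretched along its radius.
        k = rng.integers(0, 10, size=n)
        angle = 2.0 * np.pi * k / 10
        radial = rng.normal(2.0, 0.5, size=n)      # distance from the origin
        tangential = rng.normal(0.0, 0.1, size=n)  # spread perpendicular to the radius
        x = radial * np.cos(angle) - tangential * np.sin(angle)
        y = radial * np.sin(angle) + tangential * np.cos(angle)
        return np.stack([x, y], axis=1)
    if name == "swiss_roll":
        # Angle in [0, 3*pi); sqrt spreads points more evenly along the spiral.
        t = np.sqrt(rng.uniform(0.0, 1.0, size=n)) * 3.0 * np.pi
        r = t / (3.0 * np.pi) * 2.0  # radius grows linearly with angle, up to 2
        return np.stack([r * np.cos(t), r * np.sin(t)], axis=1)
    if name == "uniform":
        return rng.uniform(-2.0, 2.0, size=(n, 2))
    raise ValueError(f"unknown prior: {name}")
```

During training, a batch from `sample_prior(...)` plays the role of the "real" input to the discriminator, and the encoder's codes for a data batch play the role of the "fake" input.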

Some results:

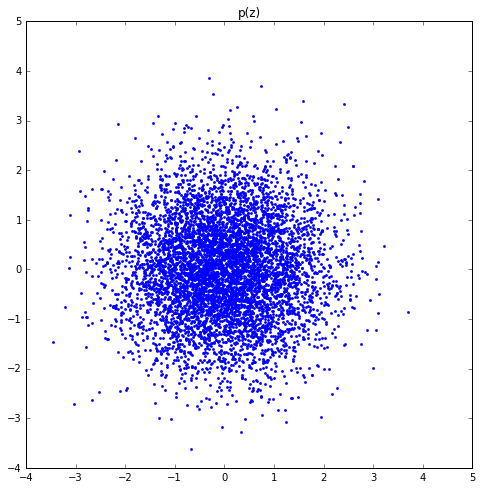

p(z) and q(z) with z_prior set to a Gaussian distribution.

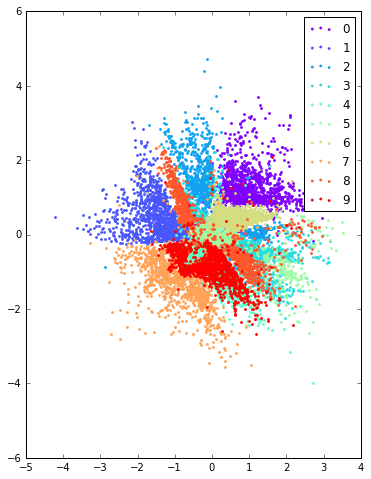

p(z) and q(z) with z_prior set to a mixture of 10 Gaussians.

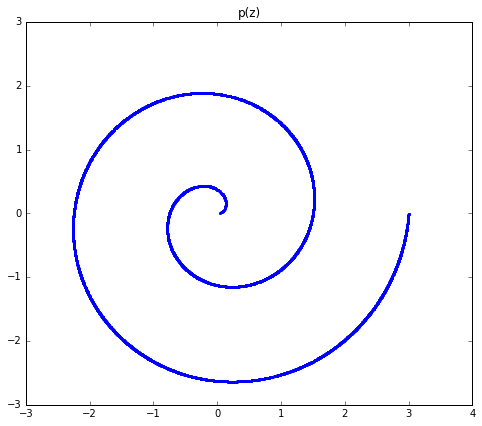

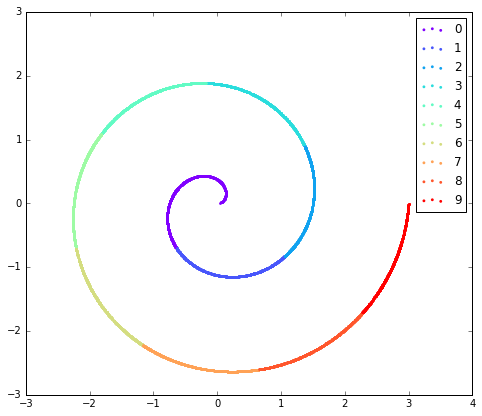

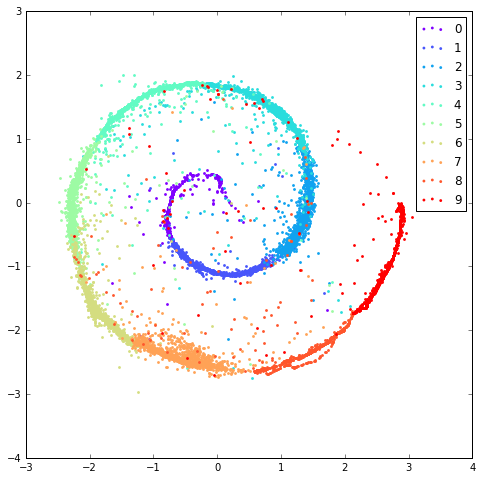

p(z) and q(z) with z_prior set to a Swiss roll distribution.

Supervised Adversarial Autoencoder

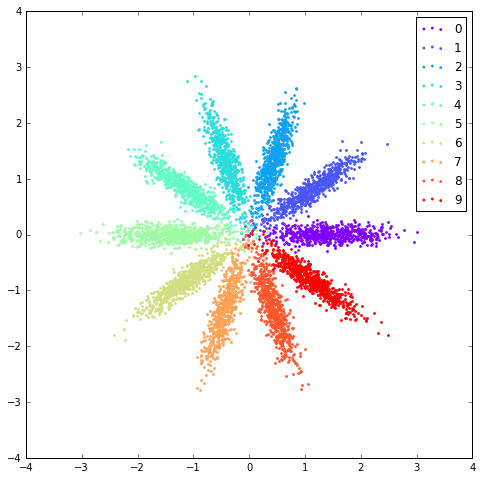

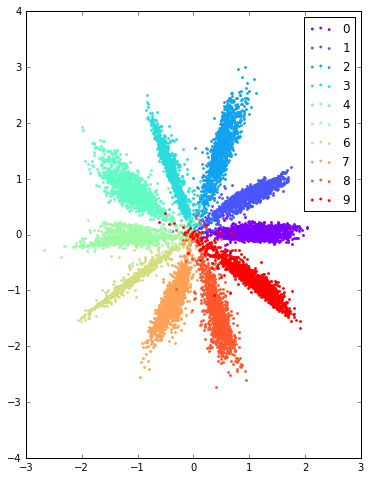

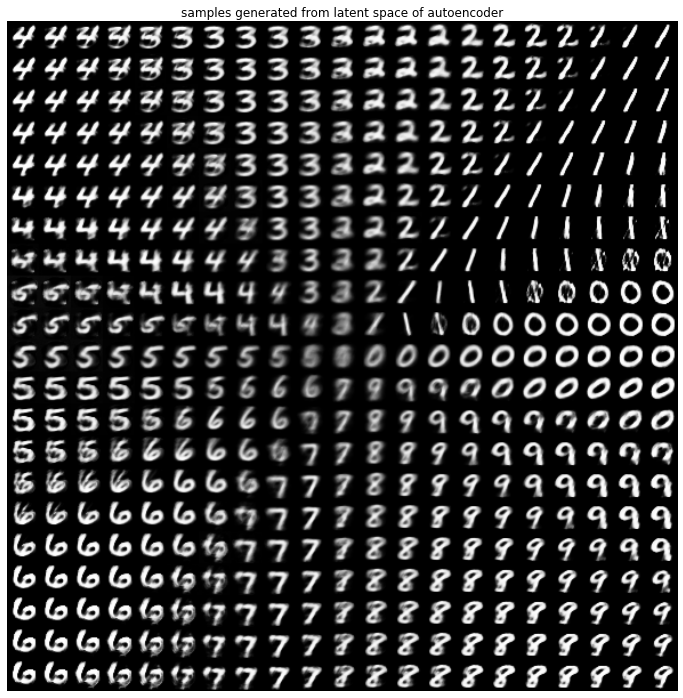

Run `aae_supervised.py` to train the model, then set `task` to `supervised` in `visualize.ipynb` to display the results. The prior distribution imposed on the 2-D latent variable can be one of {gaussian mixture, swiss roll, uniform}. In this case, the labels of both real and fake data are used during training.
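One common way to use labels, following the label-conditioned regularization described in the paper, is to feed the discriminator a one-hot label alongside z and to sample each prior point from the mixture component that matches its label. The sketch below shows that idea with NumPy. It is an assumption about the approach, not this repository's code: `one_hot`, `labeled_gaussian_mixture`, `discriminator_inputs`, and the mixture geometry are hypothetical.

```python
import numpy as np

N_CLASSES = 10

def one_hot(labels, n_classes=N_CLASSES):
    """Encode integer labels as one-hot rows."""
    labels = np.asarray(labels)
    out = np.zeros((len(labels), n_classes))
    out[np.arange(len(labels)), labels] = 1.0
    return out

def labeled_gaussian_mixture(labels, radius=2.0, std=(0.5, 0.1), rng=None):
    """Sample z ~ p(z | y): label y selects one of 10 Gaussians placed on a circle."""
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    angle = 2.0 * np.pi * labels / N_CLASSES
    radial = rng.normal(radius, std[0], size=len(labels))  # along the component's radius
    tangential = rng.normal(0.0, std[1], size=len(labels))  # perpendicular to it
    x = radial * np.cos(angle) - tangential * np.sin(angle)
    y = radial * np.sin(angle) + tangential * np.cos(angle)
    return np.stack([x, y], axis=1)

def discriminator_inputs(z_code, y_code, y_prior, rng=None):
    """Build label-augmented discriminator batches of the form [z, one_hot(y)]."""
    z_prior = labeled_gaussian_mixture(y_prior, rng=rng)
    real = np.concatenate([z_prior, one_hot(y_prior)], axis=1)  # prior samples with their labels
    fake = np.concatenate([z_code, one_hot(y_code)], axis=1)    # encoder codes with data labels
    return real, fake
```

Because the discriminator sees the label, the encoder is pushed to map each class into its own region of the latent space.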

Some results:

p(z), q(z), and output images generated from fake data with z_prior set to a mixture of 10 Gaussians.

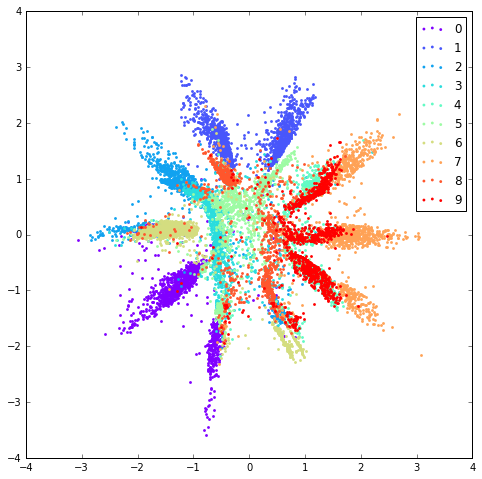

p(z) and q(z) with z_prior set to a Swiss roll distribution.

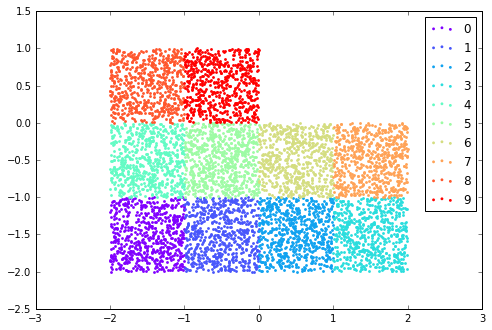

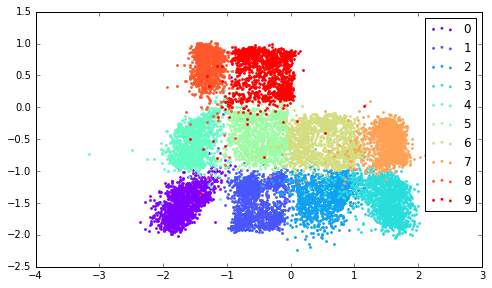

p(z) and q(z) with z_prior set to a mixture of 10 uniform distributions.

Semi-Supervised Adversarial Autoencoder

Not implemented yet.