
SAMMO (📘User Guide)

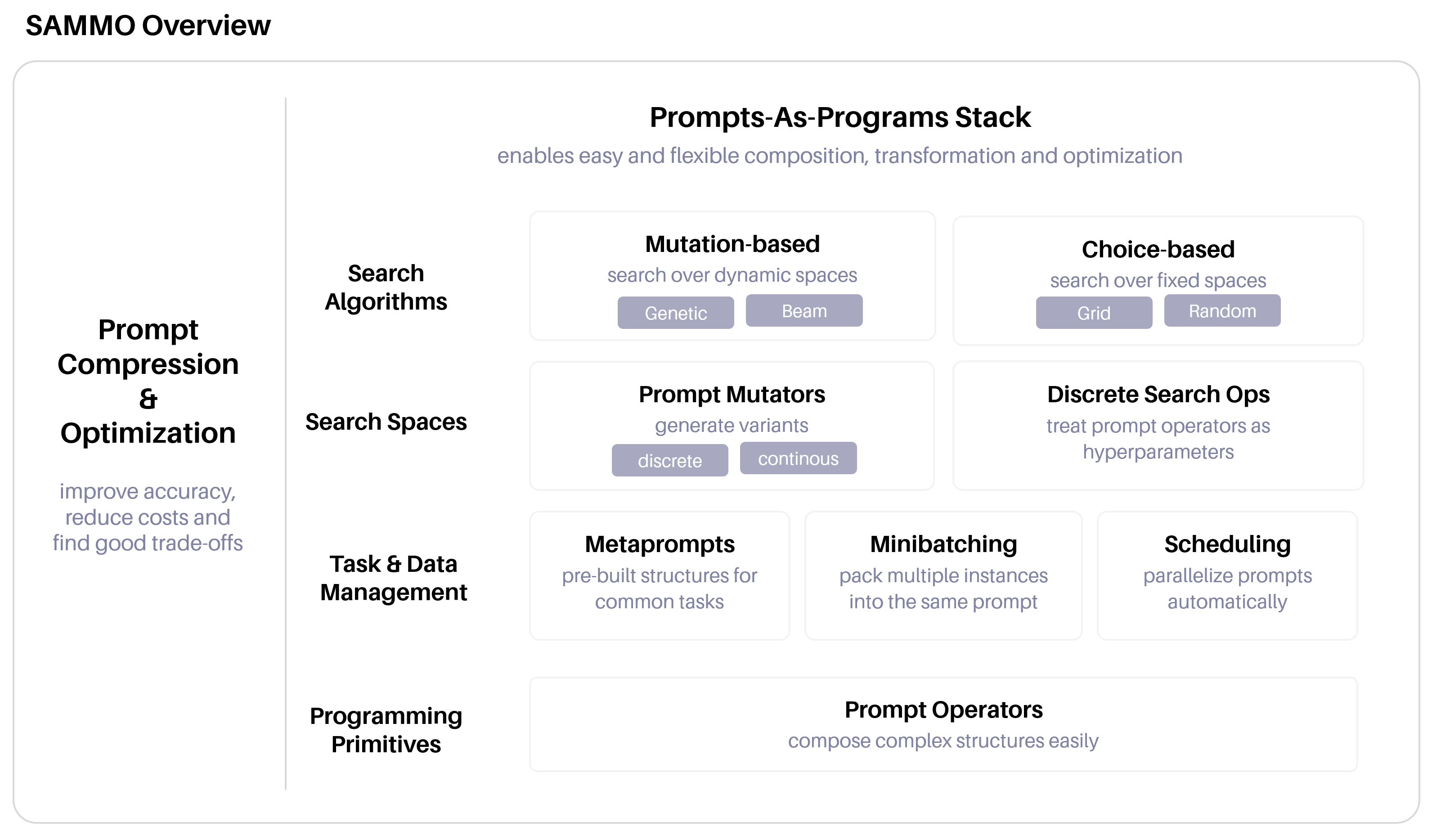

A flexible, easy-to-use library for running and optimizing prompts for Large Language Models (LLMs).

🎉 News

- Nov 13, 2024: Turn Markdown into prompt programs: First version of SAMMO express released

- Nov 1, 2024: Use CSS selectors to query and modify prompt programs!

- Oct 15, 2024: SAMMO now supports structured outputs!

How to Get Started

Go to the user guide for examples, how-tos, and API reference.

Just want to have a quick look? Try the live demo on Binder.

Install library only

pip install sammo

Install and run tutorials

Prerequisites

- Python 3.9+

The following commands install sammo and Jupyter, clone the repository, and launch Jupyter Notebook in the tutorials directory. We recommend creating and activating a virtual environment before installing packages.

pip install sammo jupyter

# clone sammo to a local directory
git clone https://github.com/microsoft/sammo.git
cd sammo

# launch jupyter notebook and open tutorials directory
jupyter notebook --notebook-dir docs/tutorials

Example

This example shows how easy it is to optimize a prompt with SAMMO. The full example is in the user guide.

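# Import paths are assumed from the SAMMO package layout; check the user guide if they differ.
from sammo.components import GenerateText, Output
from sammo.express import MarkdownParser
from sammo.mutators import BagOfMutators, Paraphrase, Rewrite
from sammo.runners import OpenAIChat
from sammo.search import BeamSearch

# API_CONFIG, accuracy, and d_train are not defined in this excerpt; the full example supplies them.
runner = OpenAIChat(model_id="gpt-3.5-turbo", api_config=API_CONFIG)

# The HTML comment tags the Instructions section with the id "instr".
PROMPT_IN_MARKDOWN = """
# Instructions <!-- #instr -->
Convert the following user queries into a SQL query.

# Table
Users:
- user_id (INTEGER, PRIMARY KEY)
- name (TEXT)
- age (INTEGER)
- city (TEXT)

# Complete this
Input: {{{input}}}
Output:
"""

# Parse the Markdown prompt into a SAMMO prompt program.
spp = MarkdownParser(PROMPT_IN_MARKDOWN).get_sammo_program()

# Mutation operators select the section to change with the CSS selector "#instr".
mutation_operators = BagOfMutators(
    Output(GenerateText(spp)),
    Paraphrase("#instr"),
    Rewrite("#instr", "Make this more verbose.\n\n {{{{text}}}}"),
)

# Search over mutated prompts, scoring each candidate with the accuracy metric.
prompt_optimizer = BeamSearch(runner, mutation_operators, accuracy)
prompt_optimizer.fit(d_train)
prompt_optimizer.show_report()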
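To give a feel for what a beam search over prompt mutations does, here is a minimal, library-free sketch. It is not SAMMO's implementation: `beam_search`, `add_precision`, `add_format`, and `toy_score` are hypothetical names invented for illustration, and the scoring function is a stand-in for measuring accuracy on training data with a real LLM.

```python
import heapq
from typing import Callable, List


def beam_search(
    initial: str,
    mutators: List[Callable[[str], str]],
    score: Callable[[str], float],
    beam_width: int = 2,
    depth: int = 3,
) -> str:
    """Return the highest-scoring prompt found by a simple beam search."""
    beam = [initial]
    best = initial
    for _ in range(depth):
        # Expand every prompt in the beam with every mutation operator,
        # and keep the unmodified prompts as candidates too.
        candidates = {mutate(p) for p in beam for mutate in mutators} | set(beam)
        # Keep only the top `beam_width` candidates (sorted first so ties are deterministic).
        beam = heapq.nlargest(beam_width, sorted(candidates), key=score)
        best = max([best, beam[0]], key=score)
    return best


def add_precision(prompt: str) -> str:
    """Hypothetical mutator: append a hint unless it is already present."""
    hint = " Be precise."
    return prompt if hint in prompt else prompt + hint


def add_format(prompt: str) -> str:
    """Hypothetical mutator: append an output-format hint unless already present."""
    hint = " Return only SQL."
    return prompt if hint in prompt else prompt + hint


def toy_score(prompt: str) -> float:
    """Stand-in for a real metric: reward two helpful phrases, penalize length."""
    reward = ("Be precise." in prompt) + ("Return only SQL." in prompt)
    return reward - 0.001 * len(prompt)


best_prompt = beam_search(
    "Convert the query to SQL.", [add_precision, add_format], toy_score
)
print(best_prompt)
```

In a real setting the score would come from running each candidate prompt against labeled examples, which is why caching and rate limiting matter once the number of candidates grows.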

Use Cases

SAMMO is designed to support:

- Efficient data labeling: Supports minibatching by packing and parsing multiple datapoints into a single prompt.

- Prompt prototyping and engineering: Re-usable components and prompt structures to quickly build and test new prompts.

- Instruction optimization: Optimize instructions to do better on a given task.

- Prompt compression: Compress prompts while maintaining performance.

- Large-scale prompt execution: Parallelization and rate limiting out of the box, so you can run many queries in parallel and at scale without overwhelming the LLM API.
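The minibatching idea from the data labeling use case can be sketched in plain Python as follows. This is an illustrative sketch only and does not use SAMMO's API: `pack`, `unpack`, `label_in_minibatches`, and `fake_llm` are hypothetical names, and `fake_llm` is a deterministic stand-in for a real model call.

```python
import re
from typing import Callable, List, Optional

# Matches lines of the form "[3] some text".
ITEM_PATTERN = re.compile(r"^\[(\d+)\][ \t]*(.*)$", flags=re.MULTILINE)


def pack(instructions: str, inputs: List[str]) -> str:
    """Pack several datapoints into a single numbered prompt."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(inputs))
    return (
        f"{instructions}\n"
        "Answer each item on its own line as '[index] answer'.\n\n"
        f"{numbered}"
    )


def unpack(response: str, n_items: int) -> List[Optional[str]]:
    """Parse a numbered response back into one answer per datapoint."""
    answers: List[Optional[str]] = [None] * n_items
    for match in ITEM_PATTERN.finditer(response):
        index = int(match.group(1))
        if index < n_items:
            answers[index] = match.group(2).strip()
    return answers


def label_in_minibatches(
    instructions: str,
    inputs: List[str],
    call_llm: Callable[[str], str],
    batch_size: int = 3,
) -> List[Optional[str]]:
    """Label all inputs using one LLM call per minibatch."""
    results: List[Optional[str]] = []
    for start in range(0, len(inputs), batch_size):
        batch = inputs[start:start + batch_size]
        results.extend(unpack(call_llm(pack(instructions, batch)), len(batch)))
    return results


calls: List[str] = []


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM: labels an item positive if it contains 'good'."""
    calls.append(prompt)
    lines = []
    for match in ITEM_PATTERN.finditer(prompt):
        label = "positive" if "good" in match.group(2) else "negative"
        lines.append(f"[{match.group(1)}] {label}")
    return "\n".join(lines)


reviews = ["good food", "slow service", "good value", "cold soup"]
labels = label_in_minibatches("Classify the sentiment.", reviews, fake_llm)
print(labels, len(calls))
```

Items whose answers cannot be parsed stay `None`, so a caller can retry just those datapoints instead of the whole batch.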

It is less useful if you want to build:

- Interactive, agent-based LLM applications (→ check out AutoGen)

- Interactive, production-ready LLM applications (→ check out LangChain)

License

This project is licensed under the MIT License.

To cite the accompanying paper, use the following BibTeX entry:

@inproceedings{schnabel-neville-2024-symbolic,
    title = "Symbolic Prompt Program Search: A Structure-Aware Approach to Efficient Compile-Time Prompt Optimization",
    author = "Schnabel, Tobias and Neville, Jennifer",
    booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2024",
    year = "2024",
    url = "https://aclanthology.org/2024.findings-emnlp.37",
    pages = "670--686"
}

Authors

SAMMO was written by Tobias Schnabel.

Contributing

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.