Awesome

Sensor-Guided Optical Flow

Demo code for "Sensor-Guided Optical Flow", ICCV 2021

This code is provided to replicate results with flow hints obtained from LiDAR data.

At the moment, we do not plan to release training code.

[Project page] - [Paper] - [Supplementary]

Reference

If you find this code useful, please cite our work:

@inproceedings{Poggi_ICCV_2021,

title = {Sensor-Guided Optical Flow},

author = {Poggi, Matteo and

Aleotti, Filippo and

Mattoccia, Stefano},

booktitle = {IEEE/CVF International Conference on Computer Vision (ICCV)},

year = {2021}

}

Contents

Introduction

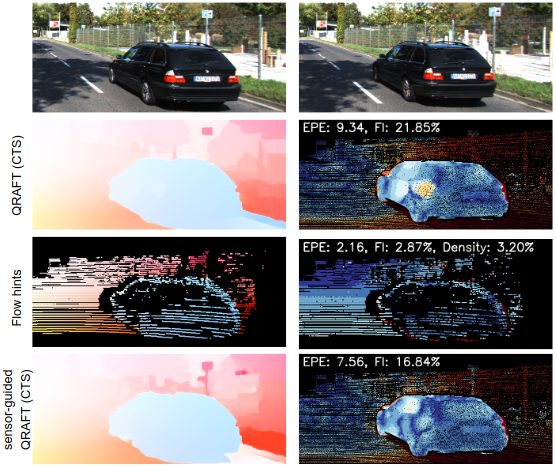

This paper proposes a framework to guide an optical flow network with external cues to achieve superior accuracy either on known or unseen domains. Given the availability of sparse yet accurate optical flow hints from an external source, these are injected to modulate the correlation scores computed by a state-of-the-art optical flow network and guide it towards more accurate predictions. Although no real sensor can provide sparse flow hints, we show how these can be obtained by combining depth measurements from active sensors with geometry and hand-crafted optical flow algorithms, leading to accurate enough hints for our purpose. Experimental results with a state-of-the-art flow network on standard benchmarks support the effectiveness of our framework, both in simulated and real conditions.

Installation

Install the project requirements in a new python 3 environment:

virtualenv -p python3 guided_flow_env

source guided_flow_env/bin/activate

pip install -r requirements.txt

Compile the guided_flow module, written in C (required for guided flow modulation):

cd external/guided_flow

bash compile.sh

cd ../..

Data

Download KITTI 2015 optical flow training set and precomputed flow hints. Place them under the data folder as follows:

data

├──training

├──image_2

├── 000000_10.png

├── 000000_11.png

├── 000001_10.png

├── 000001_11.png

...

├──flow_occ

├── 000000_10.png

├── 000000_11.png

├── 000001_10.png

├── 000001_11.png

...

├──hints

├── 000002_10.png

├── 000002_11.png

├── 000003_10.png

├── 000003_11.png

...

Weights

We provide QRAFT models tested in Tab. 4. Download the weights and unzip them under weights as follows:

weights

├──raw

├── C.pth

├── CT.pth

...

├──guided

├── C.pth

├── CT.pth

...

Usage

You are now ready to run the demo_kitti142.py script:

python demo_kitti142.py --model CTK --guided --out_dir results_CTK_guided/

Use --model to specify the weights you want to load among C, CT, CTS and CTK. By default, raw models are loaded, specify --guided to load guided weights and enable sensor-guided optical flow.

Note: Occasionally, the demo may run out of memory on ~12GB GPUs. The script saves intermediate results are saved in --out_dir. You can run again the script and it will skip all images for which intermediate results have been already saved in --out_dir, loading them from the folder. Remember to select a brand new --out_dir when you start an experiment from scratch.

In the end, the aforementioned command should print:

Validation KITTI: 2.08, 5.97

Numbers in Tab. 4 are obtained by running this code on a Titan Xp GPU, with PyTorch 1.7.0. We observed slight fluctuations in the numbers when running on different hardware (e.g., 3090 GPUs), mostly on raw models.

Contacts

m [dot] poggi [at] unibo [dot] it

Acknowledgments

Thanks to Zachary Teed for sharing RAFT code, used as codebase in our project.