
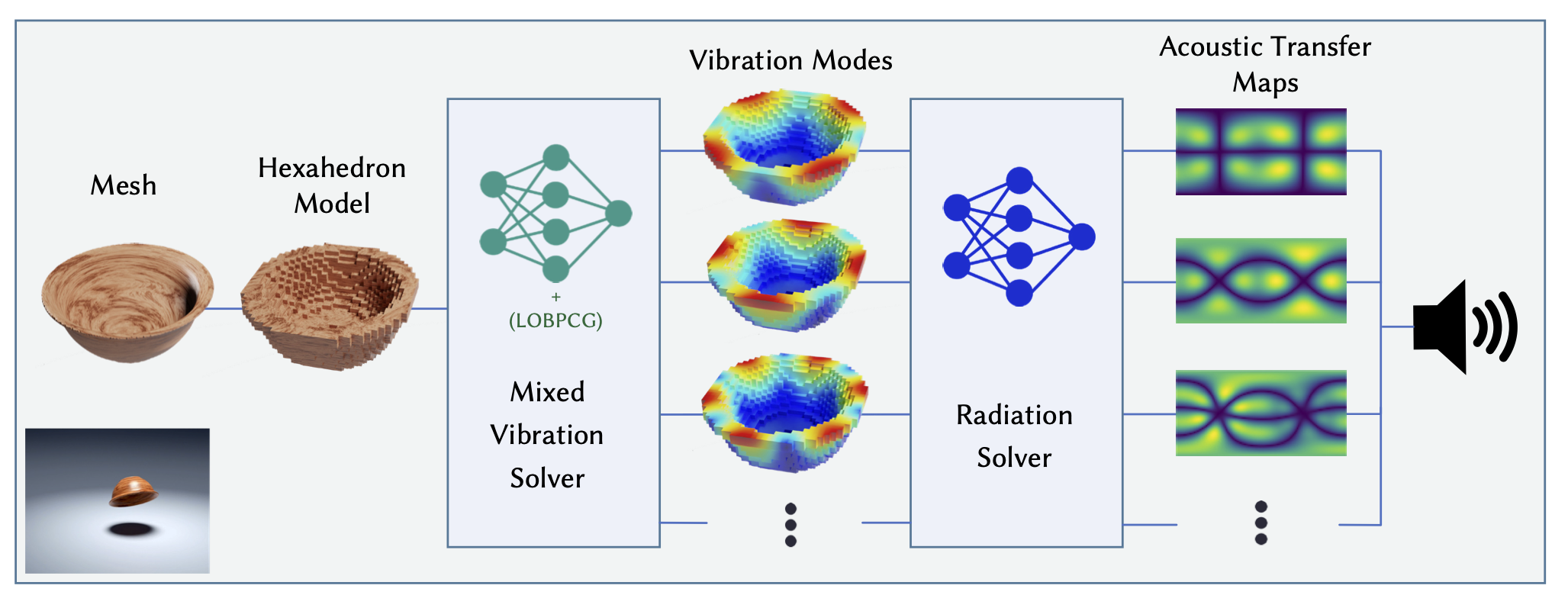

NeuralSound: Learning-based Modal Sound Synthesis with Acoustic Transfer

Introduction

Official implementation of NeuralSound. NeuralSound includes a mixed vibration solver for modal analysis and a radiation network for acoustic transfer. This repository also includes code implementing DeepModal.

Environment

- Ubuntu, Python

- Matplotlib, Numpy, Scipy, PyTorch, tensorboard, PyCUDA, Numba, tqdm, meshio

- Bempp-cl

- Minkowski Engine

- PyTorch Scatter

For Ubuntu 20.04, Python 3.7, and CUDA 11.1, a tested environment setup script is available here.

Dataset

The mesh dataset can be downloaded from the ABC Dataset. Assuming the meshes are stored in OBJ format in the dataset folder (dataset/mesh/*.obj), we provide scripts to generate synthetic data from them for training and testing:

First, change to the scripts folder:

cd dataset_scripts

Each of the following scripts is run as python XXXX.py input_data output_data; make sure that the input data are not empty.
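All of these scripts take a glob pattern for the inputs and an output folder. A minimal sketch of that convention, assuming one output file per input file with the same stem; the helper name `list_jobs` and the suffix handling are hypothetical and not taken from the repository's scripts:

```python
import glob
import os
import sys


def list_jobs(input_pattern, output_dir, out_suffix=".npz"):
    """Map each file matching input_pattern to an output path in output_dir.

    Hypothetical helper illustrating `python XXXX.py input_data output_data`;
    not taken from the repository's scripts.
    """
    inputs = sorted(glob.glob(input_pattern))
    if not inputs:
        # The input data must not be empty.
        raise FileNotFoundError(f"no input files match {input_pattern!r}")
    os.makedirs(output_dir, exist_ok=True)
    jobs = []
    for path in inputs:
        stem = os.path.splitext(os.path.basename(path))[0]
        jobs.append((path, os.path.join(output_dir, stem + out_suffix)))
    return jobs


if __name__ == "__main__" and len(sys.argv) == 3:
    for src, dst in list_jobs(sys.argv[1], sys.argv[2]):
        print(src, "->", dst)
```

The quotes around the glob patterns in the commands keep the shell from expanding `*`, so the pattern reaches the script unchanged and the script does the matching itself.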

To generate voxel models and save them to dataset/voxel/*.npy, run:

python voxelize.py "../dataset/mesh/*" "../dataset/voxel"

To compute eigenvectors and eigenvalues by modal analysis and save them to dataset/eigen/*.npz, run:

python modalAnalysis.py "../dataset/voxel/*" "../dataset/eigen"
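Modal analysis solves the generalized eigenproblem K u = λ M u, where K is the stiffness matrix and M the mass matrix; each eigenvalue λ gives a modal frequency f = √λ / (2π). The toy sketch below uses SciPy's shift-invert `eigsh` on a 1D chain of unit masses and springs, an assumption standing in for the voxel finite-element matrices used by the repository:

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import eigsh


def chain_matrices(n, k=1.0, m=1.0):
    """Stiffness K and mass M of a fixed-fixed chain of n masses.

    Toy model only, not the repository's FEM matrices.
    """
    main = 2.0 * k * np.ones(n)
    off = -k * np.ones(n - 1)
    K = sp.diags([off, main, off], [-1, 0, 1], format="csc")
    M = sp.identity(n, format="csc") * m
    return K, M


def modal_frequencies(K, M, num_modes):
    """Lowest eigenpairs of K u = lam M u and their frequencies in Hz."""
    # Shift-invert around sigma=0 returns the eigenvalues closest to zero.
    vals, vecs = eigsh(K, k=num_modes, M=M, sigma=0.0, which="LM")
    order = np.argsort(vals)
    vals, vecs = vals[order], vecs[:, order]
    freqs = np.sqrt(np.maximum(vals, 0.0)) / (2.0 * np.pi)
    return freqs, vals, vecs
```

For a free-floating object K is singular because of the rigid-body modes, so a shift of exactly zero cannot be factorized; a small negative shift is a common workaround.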

To generate the dataset for our vibration solver and save it to dataset/lobpcg/*.npz, run:

python lobpcgMatrix.py "../dataset/voxel/*" "../dataset/lobpcg"
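LOBPCG (locally optimal block preconditioned conjugate gradient) finds a few extreme eigenpairs of a symmetric generalized eigenproblem by iteratively refining a block of trial vectors, so the quality of the initial block affects how many iterations are needed. A toy sketch with `scipy.sparse.linalg.lobpcg`, assuming the same kind of chain model as a stand-in; it illustrates the solver only and does not reproduce what is stored in dataset/lobpcg:

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import lobpcg


def chain_stiffness(n):
    """Toy fixed-fixed chain stiffness matrix tridiag(-1, 2, -1)."""
    return sp.diags([-np.ones(n - 1), 2.0 * np.ones(n), -np.ones(n - 1)],
                    [-1, 0, 1], format="csr")


def smallest_modes(K, M, X0, tol=1e-8, maxiter=500):
    """Refine the initial block X0 toward the smallest eigenpairs of K x = lam M x.

    In SciPy's lobpcg, B is the mass matrix and M would be a preconditioner.
    """
    vals, vecs = lobpcg(K, X0, B=M, largest=False, tol=tol, maxiter=maxiter)
    order = np.argsort(vals)
    return vals[order], vecs[:, order]
```

Here the initial block is random; any better-aligned starting block would simply be passed as `X0` instead.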

To generate the dataset for our radiation solver and save it to dataset/acousticMap/*.npz, run:

python acousticTransfer.py "../dataset/eigen/*" "../dataset/acousticMap"

To generate the dataset for DeepModal and save it to dataset/deepmodal/*.npz, run:

python deepModal.py "../dataset/eigen/*.npz" "../dataset/deepmodal"

Training Vibration Solver

First, split the dataset into training, testing, and validation sets:

cd dataset_scripts

python splitDataset.py "../dataset/lobpcg/*.pt"
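The ratios and output layout used by splitDataset.py are not described here. As a rough sketch of the idea, assuming a shuffled 80/10/10 split of the matched files (the ratios, the seed, and the function name `split_files` are hypothetical):

```python
import random


def split_files(paths, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle file paths and split them into train/test/valid lists.

    Hypothetical sketch: ratios and seed are illustrative, not the values
    used by splitDataset.py.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    paths = sorted(paths)  # deterministic order before shuffling
    random.Random(seed).shuffle(paths)
    n_train = int(len(paths) * ratios[0])
    n_test = int(len(paths) * ratios[1])
    train = paths[:n_train]
    test = paths[n_train:n_train + n_test]
    valid = paths[n_train + n_test:]
    return train, test, valid
```

Fixing the seed keeps the split reproducible, so the three training sections below always evaluate on the same held-out files.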

Then you can train the vibration solver by running:

cd ../vibration

python train.py --dataset "../dataset/lobpcg" --tag default_tag --net defaultUnet --cuda 0

The log file is saved to vibration/runs/default_tag/ and the weights are saved to vibration/weights/default_tag.pt.

In the vibration solver, matrix multiplication is implemented in a manner similar to a graph neural network, which is possible because of the correspondence between convolution and matrix multiplication described in our paper. The graph convolution is implemented with PyTorch Scatter; see the function spmm_conv in src/classic/fem/project_util.py.
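Sparse matrix multiplication as graph message passing can be sketched as follows: every nonzero A[i, j] is an edge from node j to node i, the edge carries the message A[i, j] · x[j], and messages are summed (scattered) into their target rows. This sketch uses NumPy's `np.add.at` as a CPU stand-in for a PyTorch Scatter sum (`torch_scatter.scatter(..., reduce="sum")`); the function name `scatter_spmm` is hypothetical and this is not the repository's `spmm_conv`:

```python
import numpy as np
import scipy.sparse as sp


def scatter_spmm(row, col, val, x, num_rows):
    """Compute y = A @ x from COO entries (row, col, val) via scatter-add.

    Hypothetical illustration; np.add.at stands in for torch_scatter.
    Each nonzero is an edge col -> row whose message is val * x[col].
    """
    messages = val[:, None] * x[col]  # (nnz, features) edge messages
    y = np.zeros((num_rows, x.shape[1]), dtype=np.result_type(val, x))
    np.add.at(y, row, messages)  # unbuffered sum over repeated row indices
    return y
```

`np.add.at` is used instead of `y[row] += messages` because the latter silently drops repeated indices, which would lose contributions from rows with several nonzeros.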

Training Radiation Solver

First, split the dataset into training, testing, and validation sets:

cd dataset_scripts

python splitDataset.py "../dataset/acousticMap/*.npz"

Then you can train the radiation solver by running:

cd ../acoustic

python train.py --dataset "../dataset/acousticMap" --tag default_tag --cuda 0

The log file is saved to acoustic/runs/default_tag/ and the weights are saved to acoustic/weights/default_tag.pt. Visualized FFAT maps are saved to acoustic/images/default_tag/ (in each image, the upper map is the ground truth and the lower map is the prediction).
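An FFAT (far-field acoustic transfer) map stores, for each direction on the sphere, the coefficient ψ(θ, φ) of the far-field approximation |p(r, θ, φ)| ≈ ψ(θ, φ) / r of a mode's radiated pressure amplitude, which is why it can be stored and visualized as a 2D image over (θ, φ). A minimal sketch, assuming a callable that returns complex pressure at given points and a hypothetical 32 × 64 grid, estimates ψ as r · |p| on a large sphere; a point monopole, whose ψ is the constant 1/(4π), serves as a check:

```python
import numpy as np


def direction_grid(n_theta, n_phi):
    """Unit directions on a (theta, phi) grid; theta is polar, phi is azimuth."""
    theta = (np.arange(n_theta) + 0.5) * np.pi / n_theta
    phi = np.arange(n_phi) * 2.0 * np.pi / n_phi
    t, p = np.meshgrid(theta, phi, indexing="ij")
    return np.stack([np.sin(t) * np.cos(p),
                     np.sin(t) * np.sin(p),
                     np.cos(t)], axis=-1)  # (n_theta, n_phi, 3)


def ffat_map(pressure, radius, n_theta=32, n_phi=64):
    """Hypothetical helper: estimate psi(theta, phi) = r * |p(r * d)| at large r."""
    points = radius * direction_grid(n_theta, n_phi)
    return radius * np.abs(pressure(points))


def monopole(k):
    """Complex pressure of a unit point source at the origin (free-space Green's function)."""
    def p(points):
        r = np.linalg.norm(points, axis=-1)
        return np.exp(1j * k * r) / (4.0 * np.pi * r)
    return p
```

For a real object the pressure would come from a radiation solve rather than a closed form, and ψ varies with direction; the monopole only confirms that the 1/r factor is removed correctly.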

The network architecture, defined in src/net/acousticnet.py, is more compact than the original architecture in our paper while achieving similar performance.

Training DeepModal

First, split the dataset into training, testing, and validation sets:

cd dataset_scripts

python splitDataset.py "../dataset/deepmodal/*.pt"

Then you can train DeepModal by running:

cd ../deepmodal

python train.py --dataset "../dataset/deepmodal" --tag default_tag --net defaultUnet --cuda 0

The log file is saved to deepmodal/runs/default_tag/ and the weights are saved to deepmodal/weights/default_tag.pt. DeepModal is faster than the mixed vibration solver in NeuralSound, but its error is larger. This implementation of DeepModal uses a sparse convolutional neural network (SCNN) rather than the convolutional neural network (CNN) used in the original paper.
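A sparse convolution evaluates the kernel only around occupied voxels instead of over the whole dense grid. The sketch below is a submanifold-style sparse 3D convolution with scalar features: outputs are produced only at active sites, and inactive neighbors contribute zero. It is plain NumPy for illustration, not how a library such as Minkowski Engine implements it; the function name `sparse_conv3d` and the coordinate/feature layout are assumptions:

```python
import itertools

import numpy as np


def sparse_conv3d(coords, feats, weight):
    """Submanifold sparse 3D convolution with scalar features (illustrative).

    coords: (N, 3) integer coordinates of active voxels.
    feats:  (N,) feature value per active voxel.
    weight: (K, K, K) kernel with odd K; outputs exist only at coords.
    """
    r = weight.shape[0] // 2
    # Hash map from coordinate to row index lets us find neighbors
    # without allocating a dense grid.
    index = {tuple(c): i for i, c in enumerate(coords.tolist())}
    out = np.zeros(len(coords), dtype=np.result_type(feats, weight))
    for dx, dy, dz in itertools.product(range(-r, r + 1), repeat=3):
        w = weight[dx + r, dy + r, dz + r]
        for i, (x, y, z) in enumerate(coords.tolist()):
            j = index.get((x + dx, y + dy, z + dz))
            if j is not None:  # inactive neighbors contribute zero
                out[i] += w * feats[j]
    return out
```

The cost scales with the number of occupied voxels rather than the grid volume, which is the main reason sparse convolutions suit voxelized shell-like objects.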

Blender Scripts

The scripts in the blender folder are used to generate videos with modal sound.
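Modal sound synthesis renders an impact sound as a sum of exponentially damped sinusoids, one per vibration mode: s(t) = Σᵢ aᵢ e^(−dᵢ t) sin(2π fᵢ t). A minimal sketch, assuming the frequencies fᵢ, damping rates dᵢ, and amplitudes aᵢ are already known (in a full pipeline they would typically come from modal analysis, the impact excitation, and acoustic transfer); the parameter values below are made up for illustration:

```python
import numpy as np


def modal_sound(freqs, damping, amps, duration=1.0, sample_rate=44100):
    """Sum of damped sinusoids a_i * exp(-d_i t) * sin(2 pi f_i t).

    freqs in Hz, damping in 1/s, amps as linear gains; returns float samples.
    """
    t = np.arange(int(duration * sample_rate)) / sample_rate
    f = np.asarray(freqs, dtype=float)[:, None]
    d = np.asarray(damping, dtype=float)[:, None]
    a = np.asarray(amps, dtype=float)[:, None]
    modes = a * np.exp(-d * t) * np.sin(2.0 * np.pi * f * t)  # (num_modes, samples)
    return modes.sum(axis=0)


# Illustrative, made-up mode parameters (not from the dataset).
signal = modal_sound([440.0, 1210.0, 2530.0], [6.0, 10.0, 18.0], [1.0, 0.5, 0.25])
```

Higher modes are given larger damping here because they usually die out faster, which gives the characteristic bright attack followed by a lower ringing tail.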

Contact

For bugs and feature requests please visit GitHub Issues or contact Xutong Jin by email at jinxutong@pku.edu.cn.