
SparseProp: Temporal Proposals for Activity Detection

This project hosts code for the framework introduced in the paper: Fast Temporal Activity Proposals for Efficient Detection of Human Actions in Untrimmed Videos

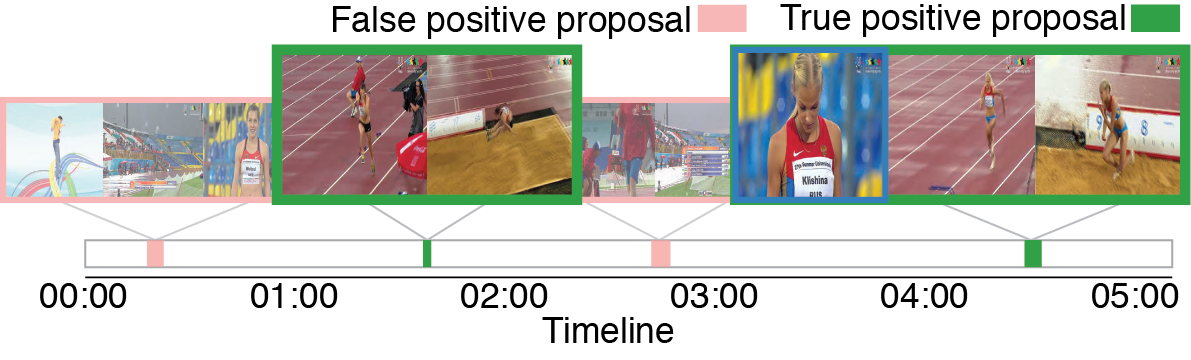

The paper introduces a new method for generating temporal proposals in untrimmed videos. The method not only retrieves the temporal locations of actions with high recall, but also generates proposals quickly.
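Recall for temporal proposals is usually measured with temporal intersection-over-union (tIoU). A ground-truth action counts as retrieved if at least one proposal overlaps it with a tIoU at or above a chosen threshold. The sketch below illustrates that metric. It assumes segments are given as (start, end) pairs. The function names are hypothetical and are not part of SparseProp's code.

```python
def temporal_iou(a, b):
    """Temporal intersection-over-union of two (start, end) segments."""
    # Length of the overlap (zero if the segments are disjoint).
    inter = max(0.0, float(min(a[1], b[1]) - max(a[0], b[0])))
    # Union length = sum of both lengths minus the overlap.
    union = float((a[1] - a[0]) + (b[1] - b[0])) - inter
    return inter / union if union > 0 else 0.0


def recall_at_tiou(proposals, ground_truth, threshold=0.5):
    """Fraction of ground-truth segments matched by at least one
    proposal with tIoU >= threshold (hypothetical helper)."""
    if not ground_truth:
        return 0.0
    hits = sum(
        1 for gt in ground_truth
        if any(temporal_iou(p, gt) >= threshold for p in proposals)
    )
    return float(hits) / len(ground_truth)


if __name__ == "__main__":
    gt = [(10.0, 20.0), (50.0, 60.0)]
    props = [(12.0, 21.0), (30.0, 40.0)]
    # Only the first ground-truth segment is covered, so recall is 0.5.
    print(recall_at_tiou(props, gt, threshold=0.5))
```

Recall is typically reported over a range of tIoU thresholds and proposal counts, rather than at a single threshold.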

If you find this code useful in your research, please cite:

@InProceedings{sparseprop,
  author = {Caba Heilbron, Fabian and Niebles, Juan Carlos and Ghanem, Bernard},
  title = {Fast Temporal Activity Proposals for Efficient Detection of Human Actions in Untrimmed Videos},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2016}
}

What do you need to know before using SparseProp?

- Dependencies: SparseProp is implemented in Python 2.7 and relies on the following third-party packages: NumPy, scikit-learn, h5py, pandas, SPArse Modeling Software (SPAMS), and Joblib.

- Installation: Once all the dependencies are installed correctly, simply clone this repository to install SparseProp:

  git clone https://github.com/cabaf/sparseprop.git

- Feature extraction: The feature extraction module is not included in this code. The current version of SparseProp supports only C3D features as the video representation.
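A quick way to check the dependencies is to try importing each one. Below is a minimal sketch of that check. The import names are assumptions about how these packages are usually installed: `sklearn` for scikit-learn and `spams` for SPArse Modeling Software. The script is not part of the repository. It is written to run under both Python 2.7 and Python 3.

```python
import importlib

# Assumed import names for the packages listed above.
REQUIRED_MODULES = ["numpy", "sklearn", "h5py", "pandas", "spams", "joblib"]


def missing_modules(names):
    """Return the subset of `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing dependencies: " + ", ".join(missing))
    else:
        print("All SparseProp dependencies are importable.")
```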

What does SparseProp provide?

- Pre-trained model: A Class-Induced model trained on videos from the Thumos14 validation subset. Videos are represented using C3D features.

- Pre-computed action proposals: The temporal action proposals retrieved on the Thumos14 test set.

- Code for retrieving proposals in new videos: Use the script retrieve_proposals.py to retrieve temporal segments in new videos. You will need to extract the C3D features yourself (please read sparseprop.feature for guidelines on how to format the C3D features).

- Code for training a new model: Use the script run_train.py to train a model on a new dataset (or new features). For further information, please read the documentation in the script.

Try our demo!

SparseProp provides a demo that takes as input the C3D features of a sample video and a pre-trained Class-Induced model, and retrieves temporal segments that are likely to contain human actions. To try the demo, run the following command:

python retrieve_proposals.py data/demo_input.csv data/demo_c3d.hdf5 data/class_induced_thumos14.pkl data/demo_proposals.csv

The command above should generate a CSV file (data/demo_proposals.csv) containing the retrieved temporal proposals, each with an associated score.
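Once the proposals file exists, a common next step is to keep only the highest-scoring segments. The sketch below shows one way to do that with the standard `csv` module. It assumes the file has a header row, and it assumes the column names `video-name`, `f-init`, `f-end` and `score`. Those names are hypothetical, so check the header of your generated file and adjust `score_col` accordingly.

```python
import csv
import io

# Hypothetical example rows; the real column names may differ.
SAMPLE = """video-name,f-init,f-end,score
video_a,0,128,0.41
video_a,96,320,0.87
video_a,400,512,0.65
"""


def top_proposals(lines, k=5, score_col="score"):
    """Return the k highest-scoring proposal rows from a CSV with a header.

    `lines` can be an open file or any iterable of CSV text lines.
    """
    rows = list(csv.DictReader(lines))
    # Sort by score, highest first.
    rows.sort(key=lambda row: float(row[score_col]), reverse=True)
    return rows[:k]


if __name__ == "__main__":
    for row in top_proposals(io.StringIO(SAMPLE), k=2):
        print(row["video-name"], row["f-init"], row["f-end"], row["score"])
```

To apply it to the demo output, pass an open file handle instead of the sample, for example `top_proposals(open("data/demo_proposals.csv"), k=10)`.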

Windows users: Please be aware of this issue

Who is behind it?

| Main contributor | Co-Advisor | Advisor |
|---|---|---|
| Fabian Caba | Juan Carlos Niebles | Bernard Ghanem |

Do you want to contribute?

- Check the open issues, or open a new issue to start a discussion about your idea or the bug you found

- Fork the repository and make your changes!

- Send a pull request