
TAME (TAiwan Mixture of Experts) <br/>LLM for Taiwanese Culture across Diverse Domains

<p align="center"> ✍️ <a href="https://chat.twllm.com/" target="_blank">Online Demo</a> • 🤗 <a href="https://huggingface.co/collections/yentinglin/taiwan-llm-6523f5a2d6ca498dc3810f07" target="_blank">Model Collection</a> • 🐦 <a href="https://twitter.com/yentinglin56" target="_blank">Twitter/X</a> • 📃 <a href="https://arxiv.org/pdf/2311.17487.pdf" target="_blank">Model Paper</a> • 📃 <a href="https://arxiv.org/pdf/2403.20180" target="_blank">Eval Paper</a> • 👨️ <a href="https://yentingl.com/" target="_blank">Yen-Ting Lin</a> <br/><br/> <img src="https://cdn-uploads.huggingface.co/production/uploads/5df9c78eda6d0311fd3d541f/vlfv5sHbt4hBxb3YwULlU.png" width="500"> <br/> <a href="https://github.com/tatsu-lab/stanford_alpaca/blob/main/LICENSE"> <img src="https://img.shields.io/badge/Code%20License-Apache_2.0-green.svg"></a> <a href="https://github.com/tatsu-lab/stanford_alpaca/blob/main/DATA_LICENSE"> <img src="https://img.shields.io/badge/Data_License-CC%20By%20NC%204.0-red.svg"></a> <br/> Partnership with 和碩聯合科技, 長庚紀念醫院, 長春集團, 欣興電子, 律果, NVIDIA, 科技報橘 </p>

🌟 Demo Site

Try out Llama-3-Taiwan interactively at twllm.com

⚔️ Chatbot Arena

Participate in the exciting Chatbot Arena and compete against other chatbots!

🚀 Quick Start for Fine-tuning

Using Axolotl for fine-tuning:

# Run the axolotl docker image

docker run --gpus '"all"' --rm -it winglian/axolotl:main-latest

# Preprocess datasets (optional but recommended)

CUDA_VISIBLE_DEVICES="" python -m axolotl.cli.preprocess example_training_config_for_finetuning_twllm.yaml

# Fine-tune

accelerate launch -m axolotl.cli.train example_training_config_for_finetuning_twllm.yaml

Check out the example_training_config_for_finetuning_twllm.yaml file for the detailed training configuration and parameters. For more information about the training framework, visit Axolotl's GitHub repository.

🚀 We're excited to introduce Llama-3-Taiwan-70B! Llama-3-Taiwan-70B is a 70B parameter model finetuned on a large corpus of Traditional Mandarin and English data using the Llama-3 architecture. It demonstrates state-of-the-art performance on various Traditional Mandarin NLP benchmarks.

The model was trained with the NVIDIA NeMo™ Framework on NVIDIA Taipei-1, which is built with NVIDIA DGX H100 systems.

The compute and data for training Llama-3-Taiwan-70B were generously sponsored by Chang Gung Memorial Hospital, Chang Chun Group, Legalsign.ai, NVIDIA, Pegatron, TechOrange, and Unimicron (in alphabetical order).

We would like to acknowledge the contributions of our data providers, team members, and advisors in the development of this model, including shasha77 for high-quality YouTube scripts and study materials, Taiwan AI Labs for providing local media content, Ubitus K.K. for offering gaming content, Professor Yun-Nung (Vivian) Chen for her guidance and advice, Wei-Lin Chen for leading our pretraining data pipeline, Tzu-Han Lin for synthetic data generation, Chang-Sheng Kao for enhancing our synthetic data quality, and Kang-Chieh Chen for cleaning instruction-following data.

Model Summary

Llama-3-Taiwan-70B is a large language model finetuned for Traditional Mandarin and English users. It has strong capabilities in language understanding, generation, reasoning, and multi-turn dialogue. Key features include:

- 70B parameters

- Languages: Traditional Mandarin (zh-tw), English (en)

- Finetuned on a high-quality Traditional Mandarin and English corpus covering general knowledge as well as industrial knowledge in the legal, manufacturing, medical, and electronics domains

- 8K context length

- Open model released under the Llama-3 license

Training Details

- Training Framework: NVIDIA NeMo, NVIDIA NeMo Megatron

- Inference Framework: NVIDIA TensorRT-LLM

- Base model: Llama-3 70B

- Hardware: NVIDIA DGX H100 on Taipei-1

- Context length: 8K tokens (a 128K version is also available)

- Batch size: 2M tokens per step
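As a rough sanity check on the figures above, the batch size in tokens can be converted to a number of sequences per step. This is a minimal sketch that assumes "2M" means 2^21 tokens, "8K" means 8192 tokens, and every sequence is packed to the full context length; none of these details is stated in the list, and the helper name is hypothetical.

```python
# Assumption: "2M" = 2**21 tokens and "8K" = 8192 tokens per sequence.
TOKENS_PER_STEP = 2 * 1024 * 1024
CONTEXT_LENGTH = 8 * 1024


def sequences_per_step(tokens_per_step: int, context_length: int) -> int:
    """Number of full-length sequences that fit into one training step."""
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    return tokens_per_step // context_length


print(sequences_per_step(TOKENS_PER_STEP, CONTEXT_LENGTH))  # 256
```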

Evaluation

Check out the Open TW LLM Leaderboard for the full and updated list.

Numbers are 0-shot by default.

^ Taken as the closest matching numbers from the original dataset.

Needle in a Haystack Evaluation

The "Needle in a 出師表" evaluation tests the model's ability to locate and recall important information embedded within a large body of text, using the classic Chinese text 《出師表》 by 諸葛亮.

To run the evaluation, use the script.

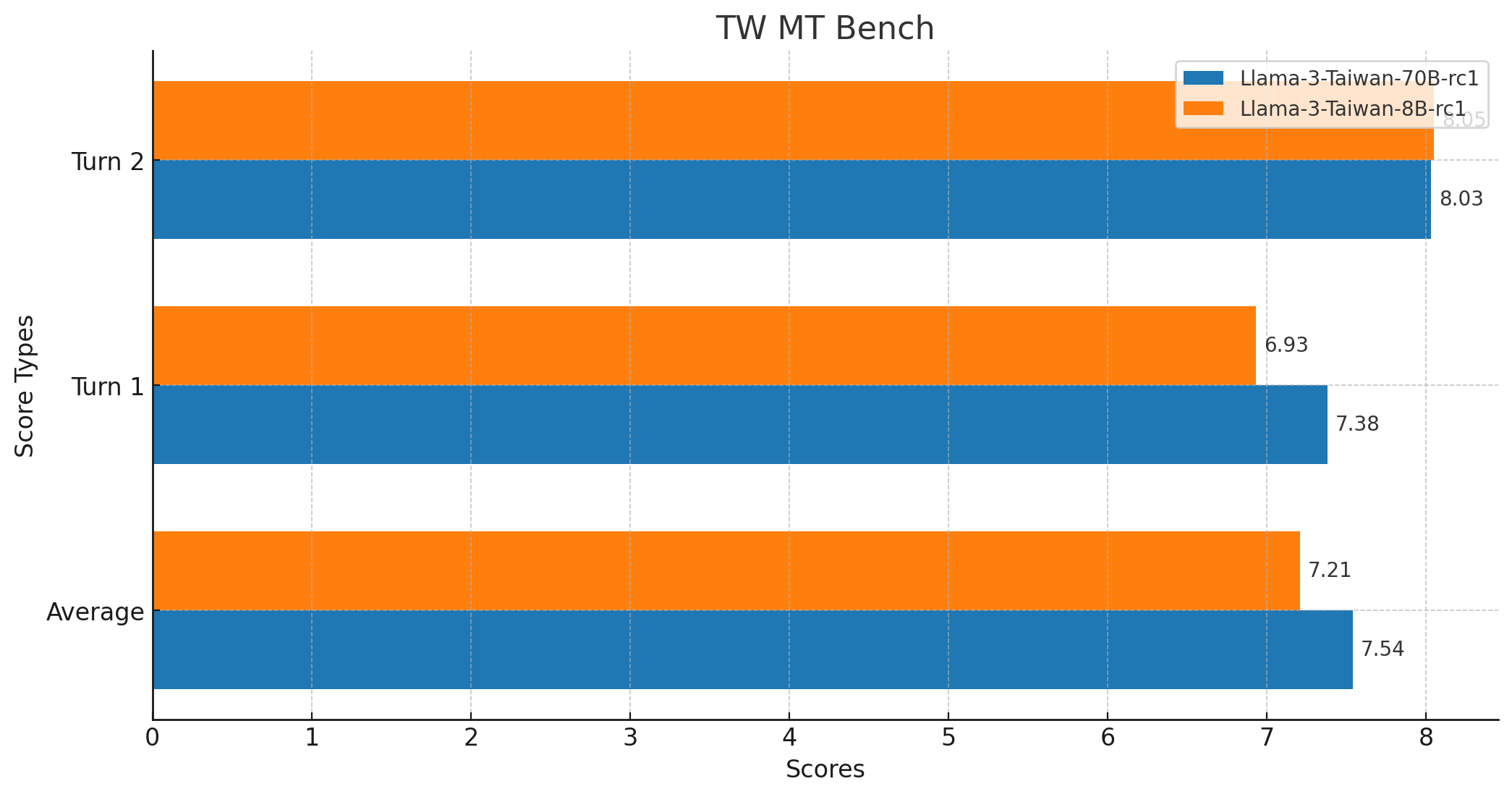
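The idea behind a needle-in-a-haystack test can be sketched as follows. This is not the project's evaluation script: the function names, the placeholder haystack, and the needle sentence are all hypothetical, and the pass check is a simple substring match chosen for illustration.

```python
def insert_needle(haystack: str, needle: str, depth: float) -> str:
    """Insert `needle` into `haystack` at a relative depth between 0 and 1."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = int(len(haystack) * depth)
    return haystack[:position] + needle + haystack[position:]


def build_prompt(haystack: str, needle: str, question: str, depth: float) -> str:
    """Build a long-context prompt with the needle hidden inside the haystack."""
    context = insert_needle(haystack, needle, depth)
    return f"{context}\n\nQuestion: {question}"


def recalled(answer: str, expected: str) -> bool:
    """Hypothetical pass criterion: the expected fact appears in the answer."""
    return expected in answer


# Placeholder haystack; a real run would load the full source text instead.
haystack = "filler sentence. " * 200
needle = "The secret code is 4729. "
prompt = build_prompt(haystack, needle, "What is the secret code?", depth=0.5)
```

Sweeping `depth` and the haystack length, then recording `recalled(...)` for each model answer, yields the kind of grid usually plotted for this test.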

TW MT-Bench Score

- Average Score: 7.5375

- Maximum Score: 10

- Minimum Score: 1

- Median Score: 9.0

- Standard Deviation: 3.0349783771882133

- Total Number of Scores: 160

- Model response

- GPT-4 Eval

- Code forked from mtkresearch/TCEval, with bug fixes
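Summary statistics like those listed above can be computed from a flat list of per-answer judge scores. The sketch below uses Python's standard `statistics` module with hypothetical scores; it is not the evaluation code, and it is an assumption that the sample standard deviation is wanted (use `statistics.pstdev` for the population form).

```python
import statistics


def summarize(scores: list[float]) -> dict:
    """Summarize a list of judge scores."""
    return {
        "average": statistics.fmean(scores),
        "maximum": max(scores),
        "minimum": min(scores),
        "median": statistics.median(scores),
        # Assumption: sample standard deviation; the source does not say which form was used.
        "stdev": statistics.stdev(scores),
        "count": len(scores),
    }


# Hypothetical scores, for illustration only.
summary = summarize([10, 1, 9, 9])
print(summary)
```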

Use Cases

Llama-3-Taiwan-70B can be applied to a wide variety of NLP tasks in Traditional Mandarin and English, including:

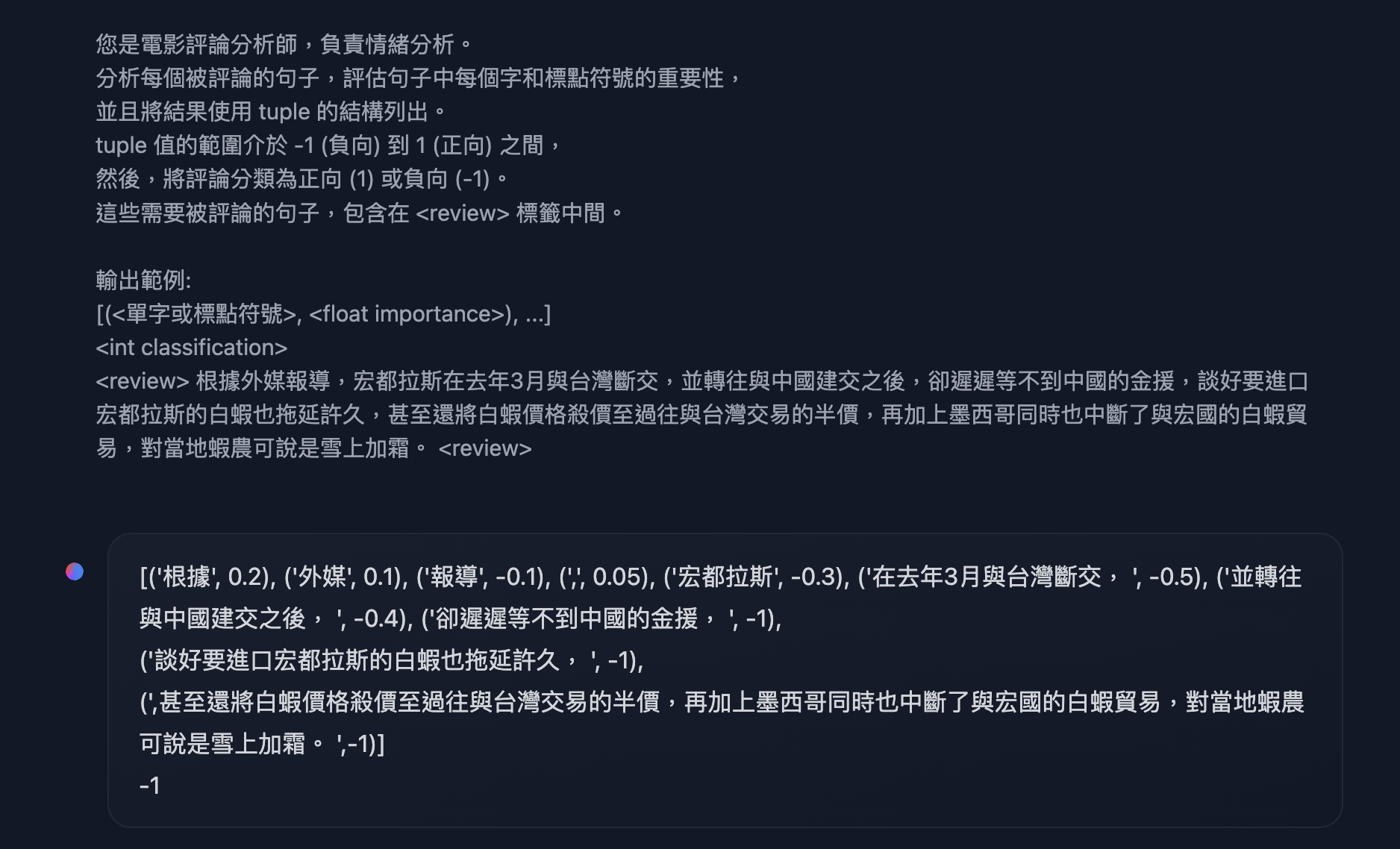

<details> <summary>1. Multi-turn dialogue (多輪對話)</summary> <pre>
System: You are an AI assistant called Twllm, created by TAME (TAiwan Mixture of Expert) project.
User: 嗨,你好!
Assistant: 你好!我今天可以如何協助你?
User: 就想聊天而已....
Assistant: 當然,我很樂意和你聊天!有什麼特別想談論的嗎?
</pre> </details>

<details> <summary>2. RAG (Retrieval-Augmented Generation, 檢索增強生成)</summary>Demo: turn on Search Web on twllm.com</details>

If you are interested in function calling, I strongly recommend using constrained decoding to enable JSON mode.
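A minimal sketch of what this can look like with an OpenAI-compatible client is shown below. The tool schema and helper names are hypothetical. Passing the schema through `extra_body` as `guided_json` is an assumption about a vLLM server; other servers, and other vLLM versions, may expect a different parameter.

```python
import json

# Hypothetical tool-call schema, used only for illustration.
WEATHER_CALL_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string", "enum": ["get_weather"]},
        "arguments": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
    "required": ["name", "arguments"],
}


def build_request(user_message: str) -> dict:
    """Keyword arguments intended for client.chat.completions.create(**kwargs)."""
    return {
        "model": "yentinglin/Llama-3-Taiwan-70B-Instruct",
        "messages": [{"role": "user", "content": user_message}],
        # Assumption: the server accepts a `guided_json` extra parameter
        # that constrains decoding to the given JSON schema.
        "extra_body": {"guided_json": WEATHER_CALL_SCHEMA},
    }


def parse_call(raw: str) -> tuple[str, dict]:
    """Parse the model's JSON output into (function name, arguments)."""
    call = json.loads(raw)
    missing = [key for key in WEATHER_CALL_SCHEMA["required"] if key not in call]
    if missing:
        raise ValueError(f"missing keys in tool call: {missing}")
    return call["name"], call["arguments"]
```

With constrained decoding enabled, the returned message content should always parse with `json.loads`, so `parse_call` only needs to guard against missing keys.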

Example from HW7 of Introduction to Generative AI (Spring 2024) by Hung-Yi Lee (李宏毅)

Get Started

Caveat: Set these as stop tokens: ["USER:", "ASSISTANT:", "<|im_end|>", "<|eot_id|>", "<|end_of_text|>"]
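OpenAI-compatible clients generally accept these through the `stop` parameter of a chat completion request. Where a serving stack does not support that, the same effect can be approximated on the client side. The helper below is a hypothetical sketch of that fallback, not part of any library.

```python
STOP_TOKENS = ["USER:", "ASSISTANT:", "<|im_end|>", "<|eot_id|>", "<|end_of_text|>"]


def truncate_at_stop(text: str, stop_tokens: list[str] = STOP_TOKENS) -> str:
    """Cut `text` at the earliest occurrence of any stop token."""
    cut = len(text)
    for token in stop_tokens:
        index = text.find(token)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


raw_output = "你好!<|eot_id|>USER: next turn"
print(truncate_at_stop(raw_output))
```

Server-side stop sequences are preferable when available, because generation halts immediately instead of producing tokens that are later discarded.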

Hugging Face Transformers library

You can use Llama-3-Taiwan-70B with the Hugging Face Transformers library:

# Use a pipeline as a high-level helper
from transformers import pipeline

messages = [
    {"role": "system", "content": "You are an AI assistant called Twllm, created by TAME (TAiwan Mixture of Expert) project."},
    {"role": "user", "content": "你好,請問你可以完成什麼任務?"},
    {"role": "assistant", "content": "你好,我可以幫助您解決各種問題、提供資訊並協助完成多種任務。例如:回答技術問題、提供建議、翻譯文字、尋找資料或協助您安排行程等。請告訴我如何能幫助您。"},
    {"role": "user", "content": "太棒了!"},
]

pipe = pipeline("text-generation", model="yentinglin/Llama-3-Taiwan-70B-Instruct")
pipe(messages)
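To pull out just the model's reply, a small helper like the one below may be useful. It is a sketch that assumes the pipeline returns chat-style output (a list whose first item holds the whole conversation under `generated_text`), as recent Transformers releases do for message input; the helper name is hypothetical, and the example runs on a mocked result rather than the real model.

```python
def last_assistant_reply(outputs: list) -> str:
    """Return the newest reply from text-generation pipeline output."""
    generated = outputs[0]["generated_text"]
    if isinstance(generated, str):
        # Plain-string output (non-chat input): return it unchanged.
        return generated
    # Chat-style output: the last message is the newly generated assistant turn.
    return generated[-1]["content"]


# Mocked pipeline result, for illustration only.
mock_outputs = [{"generated_text": [
    {"role": "user", "content": "太棒了!"},
    {"role": "assistant", "content": "謝謝!"},
]}]
print(last_assistant_reply(mock_outputs))
```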

vLLM

Start the server

export NUM_GPUS=4
export PORT=8000

docker run \
  -e HF_TOKEN=$HF_TOKEN \
  --gpus '"device=0,1,2,3"' \
  -v ~/.cache/huggingface:/root/.cache/huggingface \
  -p "${PORT}:8000" \
  --ipc=host \
  vllm/vllm-openai:v0.4.0.post1 \
  --model "yentinglin/Llama-3-Taiwan-70B-Instruct" \
  -tp "${NUM_GPUS}"

Sample client code (any OpenAI-API-compatible client also works):

# pip install "openai>=1.0.0"
from openai import OpenAI

# Set OpenAI's API key and API base to use vLLM's API server.
openai_api_key = "EMPTY"
openai_api_base = "http://localhost:8000/v1"

client = OpenAI(
    api_key=openai_api_key,
    base_url=openai_api_base,
)

chat_response = client.chat.completions.create(
    model="yentinglin/Llama-3-Taiwan-70B-Instruct",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Tell me a joke."},
    ]
)
print("Chat response:", chat_response)

Enjoy exploring the capabilities of Llama-3-Taiwan-70B! We look forward to seeing what you create with this powerful open-source model. If you have any questions or feedback, please let us know.

Citation

@article{DBLP:journals/corr/abs-2311-17487,
  author       = {Yen{-}Ting Lin and
                  Yun{-}Nung Chen},
  title        = {Taiwan {LLM:} Bridging the Linguistic Divide with a Culturally Aligned
                  Language Model},
  journal      = {CoRR},
  volume       = {abs/2311.17487},
  year         = {2023},
  url          = {https://doi.org/10.48550/arXiv.2311.17487},
  doi          = {10.48550/ARXIV.2311.17487},
  eprinttype   = {arXiv},
  eprint       = {2311.17487},
  timestamp    = {Tue, 05 Dec 2023 14:40:42 +0100},
  biburl       = {https://dblp.org/rec/journals/corr/abs-2311-17487.bib},
  bibsource    = {dblp computer science bibliography, https://dblp.org}
}

@article{DBLP:journals/corr/abs-2403-20180,
  author       = {Po{-}Heng Chen and
                  Sijia Cheng and
                  Wei{-}Lin Chen and
                  Yen{-}Ting Lin and
                  Yun{-}Nung Chen},
  title        = {Measuring Taiwanese Mandarin Language Understanding},
  journal      = {CoRR},
  volume       = {abs/2403.20180},
  year         = {2024},
  url          = {https://doi.org/10.48550/arXiv.2403.20180},
  doi          = {10.48550/ARXIV.2403.20180},
  eprinttype   = {arXiv},
  eprint       = {2403.20180},
  timestamp    = {Wed, 10 Apr 2024 17:37:45 +0200},
  biburl       = {https://dblp.org/rec/journals/corr/abs-2403-20180.bib},
  bibsource    = {dblp computer science bibliography, https://dblp.org}
}

Previous Taiwan-LLM Releases

The Taiwan LLM Initiative was started by Yenting Lin (林彥廷) in July 2023.

- Version 1.0 was released in August 2023.

- Version 2.0 was released in October 2023, sponsored by Ubitus K.K.

These models are designed to support Traditional Mandarin and are optimized for Taiwanese culture and related applications. For more detailed information about our models, including demos, features, and examples, please visit our Hugging Face collection.