
Table of Contents:

- ERCNN-DRS Urban Change Monitoring

- Training/Validation Datasets

- Transfer Learning

- Paper and Citation

- Posters

- Other

- Contact

- Acknowledgments

- License

ERCNN-DRS Urban Change Monitoring

This project hosts the Ensemble of Recurrent Convolutional Neural Networks for Deep Remote Sensing (ERCNN-DRS) used for urban change monitoring with ERS-1/2 & Landsat 5 TM, and Sentinel 1 & 2 remote sensing mission pairs. It was developed for demonstration purposes (study case) in the ESA Blockchain ENabled DEep Learning for Space Data (BLENDED)<sup>1</sup> project. Two neural network models were trained for the two eras (ERS-1/2 & Landsat 5 TM: 1991-2011, and Sentinel 1 & 2: 2017-2021).

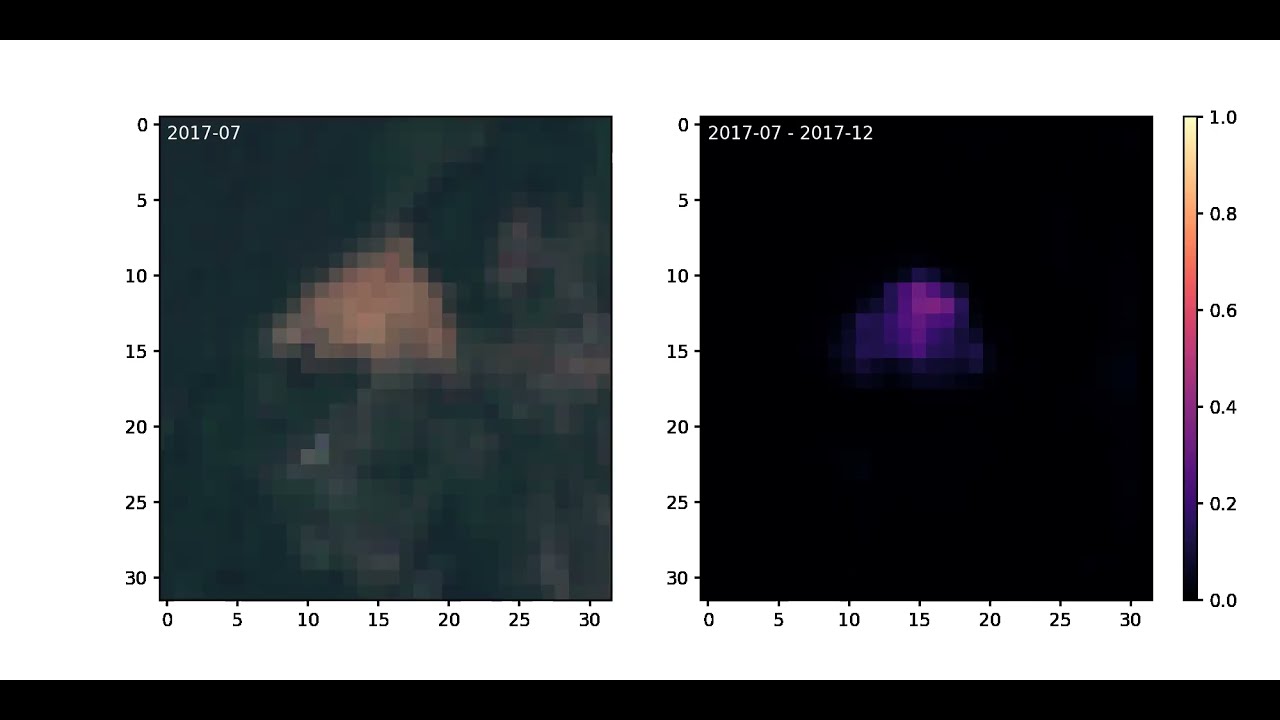

Below is an example of ERCNN-DRS continuous urban change monitoring with Sentinel 1 & 2 data (AoI of Liège). It uses deep-temporal multispectral and SAR remote sensing observations to predict urban changes over a time window of half a year (right). The higher the value, the more likely it is that an urban change occurred within that window. The left panel shows true color observations at the start of the respective window, for orientation only. [external link to YouTube]
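To make the "higher value means more likely change" reading concrete, here is a minimal sketch that turns a per-pixel prediction map into a binary change mask. It assumes that predictions are scores in the range 0 to 1 and that 0.5 is a reasonable cut-off; neither is stated in this repository, and the function names `change_mask` and `changed_fraction` are hypothetical.

```python
def change_mask(scores, threshold=0.5):
    """Mark every pixel whose score reaches the threshold as changed.

    `scores` is a 2D list of floats; the 0..1 range and the default
    threshold of 0.5 are assumptions, not values from the project.
    """
    return [[value >= threshold for value in row] for row in scores]


def changed_fraction(mask):
    """Return the share of pixels flagged as changed (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    changed = sum(sum(row) for row in mask)
    return changed / total if total else 0.0


# Toy 2x3 prediction map for one time window (made-up numbers).
scores = [
    [0.1, 0.7, 0.5],
    [0.0, 0.2, 0.9],
]
mask = change_mask(scores)
fraction = changed_fraction(mask)
print(mask, fraction)
```

In practice the threshold would likely need tuning per AoI and era, since the two networks were trained on different mission pairs.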

This repository contains only the study case of the BLENDED platform. It can be run in any environment or within the BLENDED platform. In the former case, IPFS (InterPlanetary File System) is not needed and data can be stored on any file system. The following figure shows the core of the study case with its two phases: training (blue arrows) and inference, i.e. prediction (orange arrows). Green arrows denote steps shared by both phases.

<p align="center"> <img src="./collateral/blended_platform.png" /> </p>

Dashed boxes (processing steps) are not contained here. The actors denote different roles in the BLENDED platform (model owner, data owner and result owner), which are also not relevant for the study case.

Features

- Trained with SAR and optical multispectral observation time series of hundreds up to thousands of observations (deep-temporal)

- Demonstrates usage for two mission pairs:

  - ERS-1/2 & Landsat 5 TM (1991-2011), and

  - Sentinel 1 & 2 (2017-now)

- Predicts changes which happened in one time window:

  - 1 year for ERS-1/2 & Landsat 5 TM, and

  - 6 months for Sentinel 1 & 2

- The long mission times allow monitoring of urban changes over larger time frames

Usage

Pre-Requisites

For either era, SAR (separated by ascending and descending orbit directions) and multispectral optical observations are needed as EOPatches, a format introduced by eo-learn.

The AoI shapefiles can be found in the respective subdirectories:

- ERS-1/2 & Landsat 5 TM: Rotterdam.shp, Liege.shp, and Limassol.shp (EPSG:3035)

- Sentinel 1 & 2: Rotterdam.shp, Liege.shp, and Limassol.shp (EPSG:4326)

Pre-Processing

<p align="center"> <img src="./collateral/pre-processing.png" /> </p>

Before training, observations from EOPatches need to be processed in two steps:

- Temporally stacking, assembling and tiling (creates temporary TFRecord files)

- Windowing and labeling (output: TFRecord files)

Both steps are implemented by a dedicated library called rsdtlib. It also downloads Sentinel 1 & 2 observations from Sentinel-Hub, which makes it a turnkey solution. See in particular the ERCNN-DRS related scripts for instructions on how to pre-process.

Pre-processed data (steps 1 and 2) as used in our work can be found in the Training/Validation Datasets section below.
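The windowing step can be pictured with a minimal sketch. This is not the rsdtlib implementation: the function name `make_windows`, the 180-day window (approximating the six months used for Sentinel 1 & 2), the 30-day step, and the 10-day observation spacing are all assumptions chosen for illustration. It only shows how a sorted series of observation timestamps could be cut into overlapping time windows.

```python
from datetime import datetime, timedelta


def make_windows(timestamps, window, step):
    """Yield (start, end, indices) for each window of length `window`.

    Windows begin at the first observation and advance by `step`;
    `indices` lists the observations with start <= t < end.
    Windows that would extend past the last observation are dropped.
    """
    timestamps = sorted(timestamps)
    if not timestamps:
        return
    start, last = timestamps[0], timestamps[-1]
    while start + window <= last:
        end = start + window
        indices = [i for i, t in enumerate(timestamps) if start <= t < end]
        if indices:
            yield start, end, indices
        start += step


# Hypothetical series: one observation every 10 days over 400 days.
first = datetime(2017, 1, 1)
observations = [first + timedelta(days=10 * i) for i in range(41)]

windows = list(
    make_windows(observations, window=timedelta(days=180), step=timedelta(days=30))
)
print(len(windows), "windows;", len(windows[0][2]), "observations in the first")
```

For the ERS-1/2 & Landsat 5 TM era, the same sketch would use a window of about one year instead.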

Deprecation Note:

The earlier pre-processing scripts are superseded by rsdtlib. For historical reference, the scripts that implement the two steps are still available:

- Temporally stacking, assembling and tiling (creates temporary TFRecord files):

  - ERS-1/2 & Landsat 5 TM: 1_tstack_assemble_tile.py

  - Sentinel 1 & 2: 1_tstack_assemble_tile.py

- Windowing and labeling (output: TFRecord files):

  - ERS-1/2 & Landsat 5 TM: 2_generate_windows_slabels.py

  - Sentinel 1 & 2: 2_generate_windows_slabels.py

Model Architecture

<p align="center"> <img src="./collateral/model_architecture.png" /> </p>

Training

Training is executed on the windowed and labeled TFRecord files:

Inference

We provide pre-trained networks which can be used right away:

- ERS-1/2 & Landsat 5 TM: best_weights_ercnn_drs.hdf5

- Sentinel 1 & 2: best_weights_ercnn_drs.hdf5

Examples

ERS-1/2 & Landsat 5 TM

ERS-1/2 & Landsat 5 TM example of Liège. The top row shows Landsat 5 TM true color observations (left, right) with the change prediction (middle). The bottom rows show the corresponding very-high-resolution imagery from Google Earth(tm), (c) 2021 Maxar Technologies, with predictions superimposed in red.

<p align="center"> <img src="./collateral/ers12ls5_example.png" /> </p>

Series of predictions from above example.

<p align="center"> <img src="./collateral/ers12ls5_example_series.png" /> </p>

Sentinel 1 & 2

Sentinel 1 & 2 example of Liège. The top row shows Sentinel 2 true color observations (left, right) with the change prediction (middle). The bottom rows show the corresponding very-high-resolution imagery from Google Earth(tm), (c) 2021 Maxar Technologies, with predictions superimposed in red.

<p align="center"> <img src="./collateral/s12_example.png" /> </p>

Series of predictions from above example.

<p align="center"> <img src="./collateral/s12_example_series.png" /> </p>

Training/Validation Datasets

Thanks to the data providers, we can make available the training/validation datasets on Google Drive. They are separated by era/mission generation and pre-processing steps, see list below.

In most cases you will only need the training sets. If so, use the "Windowing and labeling" archives.

ATTENTION, these files are large: 24-206 GB
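Given these sizes, it can help to check free disk space before unpacking an archive. The following is a minimal sketch using only the Python standard library. It assumes the archives are plain, uncompressed tar files (as the `.tar` extension suggests), so the unpacked size is roughly the archive size; the function name `extract_if_space` and the 10% safety margin are hypothetical.

```python
import shutil
import tarfile
from pathlib import Path


def extract_if_space(archive, dest, safety_factor=1.1):
    """Extract `archive` into `dest` only if enough disk space is free.

    Returns the list of member names. The space estimate assumes an
    uncompressed tar, i.e. contents are about as large as the archive.
    """
    archive, dest = Path(archive), Path(dest)
    dest.mkdir(parents=True, exist_ok=True)

    needed = int(archive.stat().st_size * safety_factor)
    free = shutil.disk_usage(dest).free
    if free < needed:
        raise OSError(f"need about {needed} bytes in {dest}, only {free} free")

    with tarfile.open(archive, "r") as tar:
        names = tar.getnames()
        # Use the safer "data" extraction filter where Python provides it.
        kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
        tar.extractall(dest, **kwargs)
    return names
```

A call such as `extract_if_space("training_s12.tar", "data/")` would then either unpack the archive or fail early with a clear message instead of running out of space partway through.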

ERS-1/2 & Landsat 5 TM pre-processing steps:

- Temporally stacking, assembling and tiling:

  - Rotterdam & Limassol: tstack_ers12ls5_Rotterdam_Limassol.tar [38 GB]

  - Liège: tstack_ers12ls5_Liege.tar [24 GB]

- Windowing and labeling (ready for training):

  - Rotterdam & Limassol: training_ers12ls5.tar [64 GB]

Sentinel 1 & 2 pre-processing steps:

- Temporally stacking, assembling and tiling:

  - Rotterdam & Limassol: tstack_s12_Rotterdam_Limassol.tar [133 GB]

  - Liège: tstack_s12_Liege.tar [66 GB]

- Windowing and labeling (ready for training):

  - Rotterdam & Limassol: training_s12.tar [206 GB]

Transfer Learning

We have demonstrated how to improve the prediction performance with a novel low-effort supervised transfer learning method. This method, results and collaterals can be found in the subdirectory transfer.

Paper and Citation

The full paper can be found at MDPI Remote Sensing.

@Article{rs13153000,
  AUTHOR = {Zitzlsberger, Georg and Podhorányi, Michal and Svatoň, Václav and Lazecký, Milan and Martinovič, Jan},
  TITLE = {Neural Network-Based Urban Change Monitoring with Deep-Temporal Multispectral and SAR Remote Sensing Data},
  JOURNAL = {Remote Sensing},
  VOLUME = {13},
  YEAR = {2021},
  NUMBER = {15},
  ARTICLE-NUMBER = {3000},
  URL = {https://www.mdpi.com/2072-4292/13/15/3000},
  ISSN = {2072-4292},
  DOI = {10.3390/rs13153000}
}

Posters

- Supercomputing 2021, St. Louis/USA (or copy in current repository)

- Living Planet Symposium 2022, Bonn/Germany

Other

In case you require Landsat 5 TM Top of Atmosphere (TOA) Correction, we have "extended" an existing project to support it: rio-toa_ls5.
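For orientation, the standard two-step TOA reflectance conversion can be sketched as follows: digital numbers are first rescaled to at-sensor radiance, which is then normalized by solar irradiance and sun angle. This is a generic sketch of the textbook formula, not the code of rio-toa_ls5. The function name and all parameter names are hypothetical, and the per-band gain, bias and ESUN values must come from the scene metadata and published calibration tables, which are not reproduced here.

```python
import math


def toa_reflectance(dn, gain, bias, esun, sun_elevation_deg, earth_sun_dist_au):
    """Convert a digital number to top-of-atmosphere reflectance.

    radiance    = gain * dn + bias
    reflectance = pi * radiance * d^2 / (ESUN * cos(solar zenith))

    `esun` is the band's mean exoatmospheric solar irradiance and
    `earth_sun_dist_au` the Earth-Sun distance in astronomical units.
    """
    radiance = gain * dn + bias
    solar_zenith = math.radians(90.0 - sun_elevation_deg)
    return math.pi * radiance * earth_sun_dist_au ** 2 / (esun * math.cos(solar_zenith))


# Made-up numbers purely to exercise the formula (not real calibration values).
overhead = toa_reflectance(100, gain=1.0, bias=0.0, esun=1000.0,
                           sun_elevation_deg=90.0, earth_sun_dist_au=1.0)
low_sun = toa_reflectance(100, gain=1.0, bias=0.0, esun=1000.0,
                          sun_elevation_deg=30.0, earth_sun_dist_au=1.0)
print(overhead, low_sun)
```

With the sun at 30 degrees elevation the solar zenith is 60 degrees, so the same radiance yields twice the reflectance compared to an overhead sun.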

Contact

Should you have any feedback or questions, please contact the main author: Georg Zitzlsberger (georg.zitzlsberger(a)vsb.cz).

Acknowledgments

This research was funded by ESA via the Blockchain ENabled DEep Learning for Space Data (BLENDED) project (SpaceApps Subcontract No. 4000129481/19/I-IT4I) and by the Ministry of Education, Youth and Sports from the National Programme of Sustainability (NPS II) project “IT4Innovations excellence in science - LQ1602” and by the IT4Innovations Infrastructure, which is supported by the Ministry of Education, Youth and Sports of the Czech Republic through the e-INFRA CZ (ID:90140) via the Open Access Grant Competition (OPEN-21-31).

The authors would like to thank ESA for funding the study as part of the BLENDED project<sup>1</sup> and IT4Innovations for funding the compute resources via the Open Access Grant Competition (OPEN-21-31). Furthermore, the authors would like to thank the data providers (USGS, ESA, Sentinel Hub and Google) for making remote sensing data freely available:

- Landsat 5 TM courtesy of the U.S. Geological Survey.

- ERS-1/2 data provided by the European Space Agency.

- Contains modified Copernicus Sentinel data 2017-2021 processed by Sentinel Hub (Sentinel 1 & 2).

The authors would finally like to thank the BLENDED project partners for supporting our work as a case study of the developed platform.

License

This project is made available under the GNU General Public License, version 3 (GPLv3).