
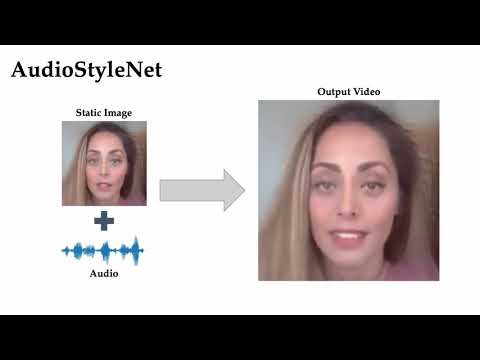

# AudioStyleNet - Controlling StyleGAN through Audio

This repository contains the code for my master's thesis on talking head generation by controlling the latent space of a pretrained StyleGAN model. The work was done at the Visual Computing and Artificial Intelligence Group at the Technical University of Munich under the supervision of Matthias Niessner and Justus Thies.

Link to thesis: https://drive.google.com/file/d/1-p-aRpTGHM3Zz6LOIMPID34HvZOeeVJT/view?usp=sharing

<p align="center"> <img src="git_material/sample_video.gif"> </p>

## Video

See the demo video for more details and results.

## Set-up

The code uses Python 3.7.5 and was tested with PyTorch 1.4.0 and CUDA 10.1. This project requires a CUDA-capable GPU.

Clone the git project:

```
$ git clone https://github.com/FeliMe/AudioStyleNet.git
```

Create two conda environments:

```
$ conda env create -f environment.yml
$ conda create -n deepspeech python=3.6
```

Install requirements:

```
$ conda activate audiostylenet
$ pip install -r requirements.txt
$ conda activate deepspeech
$ pip install -r deepspeech/deepspeech_requirements.txt
```

Install ffmpeg:

```
$ sudo apt install ffmpeg
```
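To confirm that the `audiostylenet` environment matches the tested set-up before running anything, a small check script can help. This is a minimal sketch and not part of the repository: the file name `check_env.py` and the function `check_environment` are hypothetical, and the script only compares against the versions stated above (Python 3.7, PyTorch 1.4, CUDA 10.1).

```python
# check_env.py (hypothetical helper, not part of the repository).
# Run inside the audiostylenet environment: python check_env.py
import sys


def check_environment(expected_python=(3, 7), expected_torch="1.4", expected_cuda="10.1"):
    """Return a list of warnings; an empty list means the set-up matches the tested versions."""
    problems = []

    # Compare only major.minor of the running interpreter.
    found_python = sys.version_info[:2]
    if found_python != tuple(expected_python):
        problems.append(
            f"Python {found_python[0]}.{found_python[1]} found, tested with "
            f"{expected_python[0]}.{expected_python[1]}"
        )

    # Import PyTorch lazily so a missing installation is reported instead of crashing.
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems

    if not torch.__version__.startswith(expected_torch):
        problems.append(f"PyTorch {torch.__version__} found, tested with {expected_torch}.0")
    # torch.version.cuda is None for CPU-only builds of PyTorch.
    if torch.version.cuda != expected_cuda:
        problems.append(f"PyTorch built with CUDA {torch.version.cuda}, tested with {expected_cuda}")
    if not torch.cuda.is_available():
        problems.append("No CUDA-capable GPU is visible to PyTorch")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("Environment matches the tested set-up.")
```

A warning does not necessarily mean the code will fail; it only flags a difference from the configuration the project was tested on.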

## Demo

Download the pretrained AudioStyleNet model and the StyleGAN model from Google Drive and place them in the `model/` folder. Then run:

```
$ python run_audiostylenet.py
```

A Google Colab Demo notebook can be found here: https://colab.research.google.com/drive/17o1yFz9F6XmIrB6h99u1NUxbj8eFWFmR?usp=sharing

<!-- ## Use your own images First, align your image or video: ``` $ python align_face.py --files <path to image or video> --out_dir data/images/ ``` Project the aligned images into the latent space of StyleGAN using ``` $ python projector.py --input <path to image(s)> --output_dir data/images/ ``` -->

## Use your own audio

To test the model with your own audio, first convert your audio to a WAV (`.wav`) file, then extract the audio features in the `deepspeech` environment:

```
$ cd deepspeech
$ conda activate deepspeech
$ python run_voca_feature_extraction.py --audiofiles <path to .wav file> --out_dir ../data/audio/
$ conda deactivate
```
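The extraction script expects a `.wav` file, so other formats (e.g. `.mp3`) have to be converted first; the ffmpeg installed during set-up can do this. A minimal sketch, assuming `ffmpeg` is on the `PATH`: the helper name `to_wav.py`, its functions, and the choice of 16 kHz mono 16-bit PCM output are assumptions, not requirements documented by the repository.

```python
# to_wav.py (hypothetical helper, not part of the repository).
# Converts an audio file to WAV with ffmpeg, then prints basic WAV properties.
# Usage: python to_wav.py input.mp3 output.wav
import subprocess
import sys
import wave


def ffmpeg_wav_command(src, dst, sample_rate=16000, channels=1):
    """Build an ffmpeg call that decodes `src` and writes 16-bit PCM WAV to `dst`."""
    return [
        "ffmpeg", "-y",            # overwrite dst if it already exists
        "-i", str(src),            # any input format ffmpeg can decode
        "-ac", str(channels),      # number of output channels (assumed mono)
        "-ar", str(sample_rate),   # output sample rate in Hz (assumed 16 kHz)
        "-acodec", "pcm_s16le",    # uncompressed 16-bit PCM
        str(dst),
    ]


def describe_wav(source):
    """Return channels, sample rate and duration of a PCM WAV (filename or binary file object)."""
    with wave.open(source, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "duration_s": frames / rate,
        }


if __name__ == "__main__" and len(sys.argv) == 3:
    src, dst = sys.argv[1], sys.argv[2]
    subprocess.run(ffmpeg_wav_command(src, dst), check=True)
    print(describe_wav(dst))
```

Checking the duration printed by `describe_wav` is a quick way to confirm the whole recording survived the conversion before running the feature extraction.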

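Then return to the repository root, activate the `audiostylenet` environment, and run `python run_audiostylenet.py` with its arguments adapted to your own audio features.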
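To process several recordings without switching environments by hand, the feature extraction step can be scripted with `conda run`, which executes a command inside a named conda environment. A minimal sketch under these assumptions: the helper name `extract_features.py` and its functions are hypothetical, conda 4.6 or newer is installed (needed for `conda run`), and `run_voca_feature_extraction.py` is called once per file with exactly the arguments shown above.

```python
# extract_features.py (hypothetical helper, not part of the repository).
# Runs the feature extraction inside the `deepspeech` environment via `conda run`.
# Usage (from the repository root): python extract_features.py a.wav b.wav
import os
import subprocess
import sys


def extraction_command(wav_path, out_dir="../data/audio/", env="deepspeech"):
    """Build the extraction command from this README, wrapped in `conda run -n <env>`."""
    return [
        "conda", "run", "-n", env,
        "python", "run_voca_feature_extraction.py",
        "--audiofiles", str(wav_path),
        "--out_dir", out_dir,
    ]


def extract_features(wav_paths, repo_root="."):
    """Run the extraction once per WAV file from inside the deepspeech/ folder."""
    workdir = os.path.join(repo_root, "deepspeech")
    for wav in wav_paths:
        # Use absolute paths, because the script's working directory is deepspeech/.
        cmd = extraction_command(os.path.abspath(wav))
        subprocess.run(cmd, cwd=workdir, check=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    extract_features(sys.argv[1:])
```

Keeping one call per file avoids assuming anything about whether `--audiofiles` accepts several paths at once; the features still land in `data/audio/` as with the manual commands.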